This application illustrates one common approach to time series prediction using a neural network. In this case, the output target for this network is a single time series. In general, the inputs to this network consist of lagged values of the time series together with other concomitant variables, both continuous and categorical. In this application, however, only the first three lags of the time series are used as network inputs.

The objective is to train a neural network for forecasting the series ![]() , from the first three lags of

, from the first three lags of ![]() , i.e.

, i.e.

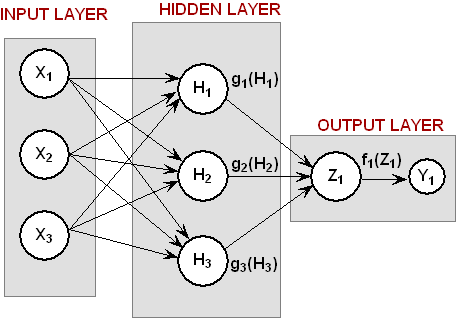
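In this example the lags are already precomputed and stored in `continuous.txt`. To show how such patterns could be built, here is a minimal Java sketch. It assumes a single series stored in chronological (ascending) order. `LagPatterns` and `build` are hypothetical names and are not part of the IMSL library. The program's header comment says the real data are sorted in descending order within each department, so a real preprocessing step would have to account for that ordering.

```java
import java.util.Arrays;

/** Hypothetical helper: turns one series into (lag 1..nLags) -> Y_t training patterns. */
final class LagPatterns {
    final double[][] x; // x[r] = {Y_{t-1}, Y_{t-2}, ..., Y_{t-nLags}}
    final double[] y;   // y[r] = Y_t

    private LagPatterns(double[][] x, double[] y) {
        this.x = x;
        this.y = y;
    }

    /** series[0] is the earliest value; the first nLags values lack a full set of lags. */
    static LagPatterns build(double[] series, int nLags) {
        int n = series.length - nLags;
        if (n <= 0) throw new IllegalArgumentException("series too short for " + nLags + " lags");
        double[][] x = new double[n][nLags];
        double[] y = new double[n];
        for (int r = 0; r < n; r++) {
            int t = r + nLags;               // index of the target Y_t
            y[r] = series[t];
            for (int k = 1; k <= nLags; k++) {
                x[r][k - 1] = series[t - k]; // lag k goes into column k-1
            }
        }
        return new LagPatterns(x, y);
    }

    public static void main(String[] args) {
        LagPatterns p = build(new double[] {1, 2, 3, 4, 5}, 3);
        System.out.println(Arrays.deepToString(p.x) + " -> " + Arrays.toString(p.y));
    }
}
```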

The structure of the network consists of three input nodes and two layers, with three perceptrons in the hidden layer and one in the output layer. The following figure depicts this structure:

There are a total of 16 weights in this network: 9 linking the three inputs to the three hidden perceptrons, 3 linking the hidden perceptrons to the output perceptron, and 4 bias weights, one per perceptron. All perceptrons in the hidden layer use logistic activation, and the output perceptron uses linear activation. Because of the large number of training patterns, the `Activation.LogisticTable` activation function is used instead of `Activation.Logistic`. `Activation.LogisticTable` uses a table lookup to calculate the logistic activation function, which significantly reduces training time. However, the two are not completely interchangeable: if a network is trained using `Activation.LogisticTable`, the same activation function must also be used for forecasting.
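The following Java sketch makes the weight count and the activation choice concrete. It evaluates a 3-3-1 network with logistic hidden perceptrons and a linear output perceptron. The weight layout and the lookup table (its range, its size, and the use of linear interpolation) are assumptions made for illustration. They are not how IMSL stores weights or implements `Activation.LogisticTable`. Comparing `forward(w, x, false)` with `forward(w, x, true)` shows why the two activations produce close but not identical outputs.

```java
/**
 * Sketch of a 3-3-1 feed-forward pass: logistic hidden layer, linear output.
 * The weight layout and the lookup table are assumptions, not IMSL internals.
 */
final class TinyNetwork {
    static final int N_IN = 3, N_HIDDEN = 3, N_OUT = 1;

    /** (inputs + bias) per hidden perceptron plus (hidden + bias) per output perceptron. */
    static int weightCount() {
        return N_HIDDEN * (N_IN + 1) + N_OUT * (N_HIDDEN + 1); // 12 + 4 = 16
    }

    static double logistic(double z) {
        return 1.0 / (1.0 + Math.exp(-z));
    }

    // Hypothetical lookup table: logistic values on a uniform grid over
    // [-RANGE, RANGE]; linear interpolation inside, saturation outside.
    static final double RANGE = 16.0;
    static final int SIZE = 1024;
    static final double[] TABLE = new double[SIZE + 1];
    static {
        for (int i = 0; i <= SIZE; i++) {
            TABLE[i] = logistic(-RANGE + 2 * RANGE * i / SIZE);
        }
    }

    static double logisticTable(double z) {
        if (z <= -RANGE) return TABLE[0];
        if (z >= RANGE) return TABLE[SIZE];
        double pos = (z + RANGE) * SIZE / (2 * RANGE);
        int i = Math.min((int) pos, SIZE - 1);
        double frac = pos - i;
        return TABLE[i] + frac * (TABLE[i + 1] - TABLE[i]);
    }

    /**
     * Assumed layout: weights 4h..4h+2 link the inputs to hidden perceptron h and
     * weight 4h+3 is its bias; weights 12..14 link the hidden layer to the output
     * perceptron and weight 15 is the output bias.
     */
    static double forward(double[] w, double[] x, boolean useTable) {
        double out = w[15]; // output bias
        for (int h = 0; h < N_HIDDEN; h++) {
            double z = w[4 * h + 3]; // hidden bias
            for (int i = 0; i < N_IN; i++) {
                z += w[4 * h + i] * x[i];
            }
            double a = useTable ? logisticTable(z) : logistic(z);
            out += w[12 + h] * a; // linear output activation: weighted sum only
        }
        return out;
    }

    public static void main(String[] args) {
        double[] w = new double[weightCount()];
        for (int k = 0; k < w.length; k++) w[k] = 0.1 * (k % 5) - 0.2; // arbitrary demo weights
        double[] x = {0.2, 0.5, 0.9};                                  // scaled lags
        System.out.println("exact: " + forward(w, x, false) + ", table: " + forward(w, x, true));
    }
}
```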

All input nodes are linked to every perceptron in the hidden layer, and every hidden perceptron is linked to the output perceptron. All inputs and the output target are then scaled using the `ScaleFilter` class so that all input and output values lie in the range [0, 1]. Consequently, forecasts must be unscaled using the `Decode()` method of the `ScaleFilter` class.
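Here is a minimal Java sketch of bounded linear scaling and its inverse. It assumes that the `Bounded` scaling method maps the interval [a, b] linearly onto [c, d]. Consult the `ScaleFilter` documentation for the exact formula it uses. `BoundedScale`, `encode`, and `decode` are hypothetical names standing in for `ScaleFilter.Encode()` and `Decode()`.

```java
/** Hypothetical sketch of bounded linear scaling from [a, b] to [c, d] and back. */
final class BoundedScale {
    final double a, b, c, d;

    BoundedScale(double a, double b, double c, double d) {
        if (b <= a || d <= c) throw new IllegalArgumentException("bounds must be increasing");
        this.a = a;
        this.b = b;
        this.c = c;
        this.d = d;
    }

    /** Maps a raw value x in [a, b] to z in [c, d]. */
    double encode(double x) {
        return c + (x - a) * (d - c) / (b - a);
    }

    /** Inverse of encode: maps a network output z back to the original units. */
    double decode(double z) {
        return a + (z - c) * (b - a) / (d - c);
    }

    public static void main(String[] args) {
        // Same limits as lowerDataLimit/upperDataLimit in the example program.
        BoundedScale s = new BoundedScale(-105000, 25000000, 0, 1);
        double z = s.encode(1_000_000);
        System.out.println(z + " -> " + s.decode(z));
    }
}
```

Values outside [lowerDataLimit, upperDataLimit] would map outside [0, 1], so the limits need to bracket the data.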

Training is conducted using the epoch trainer, which splits training into two stages. This is typically needed when the number of training patterns is large. Stage I training uses randomly selected subsets of training patterns to search for network solutions. Stage II training is optional and uses the entire set of training patterns. For larger sets of training patterns, training could take many hours, or even days; in that case, Stage II training might be bypassed.

In this example, Stage I training is conducted using the quasi-Newton trainer applied to 20 epochs, each consisting of 5,000 randomly selected observations. Stage II training also uses the quasi-Newton trainer.
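The two-stage idea can be sketched in Java as follows. This is not the IMSL `EpochTrainer` API. `EpochSketch`, `Model`, `sampleRows`, and `run` are hypothetical names, and the rule for choosing among Stage I candidates (lowest error on all patterns) is an assumption. Each epoch draws `epochSize` distinct patterns and runs the optimizer from random starting weights. Stage II, when enabled, refines the best candidate on every pattern.

```java
import java.util.Arrays;
import java.util.Random;

/** Hypothetical sketch of two-stage epoch training (not the IMSL EpochTrainer API). */
final class EpochSketch {
    /** Stand-in for an optimizer (e.g. quasi-Newton) plus its error measure. */
    interface Model {
        double[] train(double[] start, int[] rows);  // optimize weights on the given rows
        double error(double[] weights, int[] rows);  // e.g. sum of squared errors
    }

    /** Draws epochSize distinct row indices from 0..nObs-1 (partial Fisher-Yates shuffle). */
    static int[] sampleRows(int nObs, int epochSize, Random rng) {
        if (epochSize > nObs) throw new IllegalArgumentException("epochSize > nObs");
        int[] idx = new int[nObs];
        for (int i = 0; i < nObs; i++) idx[i] = i;
        for (int i = 0; i < epochSize; i++) {
            int j = i + rng.nextInt(nObs - i);
            int tmp = idx[i];
            idx[i] = idx[j];
            idx[j] = tmp;
        }
        return Arrays.copyOf(idx, epochSize);
    }

    static double[] run(Model m, int nWeights, int nObs, int nEpochs, int epochSize,
                        boolean useStage2, Random rng) {
        int[] all = new int[nObs];
        for (int i = 0; i < nObs; i++) all[i] = i;

        // Stage I: one optimizer run per epoch on a random subset, from random
        // starting weights; keep the candidate with the lowest error on all rows.
        double[] best = null;
        double bestErr = Double.POSITIVE_INFINITY;
        for (int e = 0; e < nEpochs; e++) {
            int[] rows = sampleRows(nObs, epochSize, rng);
            double[] start = new double[nWeights];
            for (int k = 0; k < nWeights; k++) start[k] = rng.nextGaussian();
            double[] w = m.train(start, rows);
            double err = m.error(w, all);
            if (err < bestErr) {
                bestErr = err;
                best = w;
            }
        }

        // Stage II (optional): refine the best Stage I solution on every pattern.
        return useStage2 ? m.train(best, all) : best;
    }
}
```

With the settings of this example, `nEpochs` would be 20 and `epochSize` 5,000, drawn from the usable training patterns.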

The training patterns are contained in two data files: `continuous.txt` and `output.txt`. The formats of these files are identical. The first line of each file contains the number of columns, or variables, in that file. The second line contains tab-delimited integer values; these are the column indices associated with the incoming data. The remaining lines contain tab-delimited floating-point values, one for each of the incoming variables.

For example, the first four lines of the `continuous.txt` file are:

3

1 2 3

0 0 0

0 0 0

There are 3 continuous input variables which are numbered, or labeled, as 1, 2, and 3.
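The file layout can be read with a few lines of code. The Java sketch below is hypothetical; `PatternFile` and `parse` are illustrative names. It splits on any run of whitespace, so it accepts both tab- and space-delimited values. Unlike the example program, it does not check for the missing-value indicator -9999999999.0 that `readDataLine` uses to flag incomplete rows.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical reader for the column-count / labels / data layout described above. */
final class PatternFile {
    final int nCol;
    final int[] labels;
    final List<double[]> rows = new ArrayList<>();

    private PatternFile(int nCol, int[] labels) {
        this.nCol = nCol;
        this.labels = labels;
    }

    static PatternFile parse(List<String> lines) {
        int nCol = Integer.parseInt(lines.get(0).trim());    // line 1: number of columns
        String[] tok = lines.get(1).trim().split("\\s+");    // line 2: integer column labels
        int[] labels = new int[nCol];
        for (int j = 0; j < nCol; j++) labels[j] = Integer.parseInt(tok[j]);
        PatternFile f = new PatternFile(nCol, labels);
        for (String line : lines.subList(2, lines.size())) { // remaining lines: data rows
            String s = line.trim();
            if (s.isEmpty()) continue;                        // tolerate blank lines
            String[] parts = s.split("\\s+");                 // tabs or spaces
            double[] row = new double[nCol];
            for (int j = 0; j < nCol; j++) row[j] = Double.parseDouble(parts[j]);
            f.rows.add(row);
        }
        return f;
    }

    public static void main(String[] args) throws IOException {
        List<String> lines = args.length > 0
                ? Files.readAllLines(Paths.get(args[0]))       // e.g. continuous.txt
                : Arrays.asList("3", "1\t2\t3", "0\t0\t0", "0\t0\t0");
        PatternFile f = parse(lines);
        System.out.println(f.nCol + " columns " + Arrays.toString(f.labels)
                + ", " + f.rows.size() + " data rows");
    }
}
```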

using System;

using Imsl.DataMining.Neural;

using Imsl.Math;

using System.Runtime.Serialization;

using System.Runtime.Serialization.Formatters.Binary;

//*****************************************************************************

// NeuralNetworkEx1.cs *

// Two Layer Feed-Forward Network Complete Example for Simple Time Series *

//*****************************************************************************

// Synopsis: This example illustrates how to use a Feed-Forward Neural *

// Network to forecast time series data. The network target is a *

// time series and the three inputs are the 1st, 2nd, and 3rd lag *

// for the target series. *

// Activation: Logistic_Table in Hidden Layer, Linear in Output Layer *

// Trainer: Epoch Trainer: Stage I - Quasi-Newton, Stage II - Quasi-Newton *

// Inputs: Lags 1-3 of the time series *

// Output: A Time Series sorted chronologically in descending order, *

// i.e., the most recent observations occur before the earliest, *

// within each department *

//*****************************************************************************

//[Serializable]

public class NeuralNetworkEx1 //: System.Runtime.Serialization.ISerializable

{

private static System.String QuasiNewton = "quasi-newton";

private static System.String LeastSquares = "least-squares";

// *************************************************************************

// Network Architecture *

// *************************************************************************

private static int nObs = 118519; // number of training patterns

private static int nInputs = 3; // three inputs

private static int nContinuous = 3; // three continuous input attributes

private static int nOutputs = 1; // one continuous output

private static int nPerceptrons = 3; // perceptrons in hidden layer

private static int[] perceptrons = new int[]{3}; // # of perceptrons in each

// hidden layer

// PERCEPTRON ACTIVATION

private static IActivation hiddenLayerActivation;

private static IActivation outputLayerActivation;

// *************************************************************************

// Epoch Training Optimization Settings *

// *************************************************************************

private static bool trace = true; //trainer logging *

private static int nEpochs = 20; //number of epochs *

private static int epochSize = 5000; //samples per epoch *

// Stage I Trainer - Quasi-Newton Trainer **********************************

private static int stage1Iterations = 5000; //max. iterations *

private static double stage1StepTolerance = 1e-09; //step tolerance *

private static double stage1RelativeTolerance = 1e-11; //rel. tolerance *

// Stage II Trainer - Quasi-Newton Trainer *********************************

private static int stage2Iterations = 5000; //max. iterations *

private static double stage2StepTolerance = 1e-09; //step tolerance *

private static double stage2RelativeTolerance = 1e-11; //rel. tolerance *

// *************************************************************************

// FILE NAMES AND FILE READER DEFINITIONS *

// *************************************************************************

// READERS

private static System.IO.StreamReader contFileInputStream;

private static System.IO.StreamReader outputFileInputStream;

// OUTPUT FILES

// File Name for Serialized Network

private static System.String networkFileName = "NeuralNetworkEx1.ser";

// File Name for Serialized Trainer

private static System.String trainerFileName = "NeuralNetworkTrainerEx1.ser";

// File Name for Serialized xData File (training input attributes)

private static System.String xDataFileName = "NeuralNetworkxDataEx1.ser";

// File Name for Serialized yData File (training output targets)

private static System.String yDataFileName = "NeuralNetworkyDataEx1.ser";

// INPUT FILES

// Continuous input attributes file. File contains Lags 1-3 of series

private static System.String contFileName = "continuous.txt";

// Continuous network targets file. File contains the original series

private static System.String outputFileName = "output.txt";

// *************************************************************************

// Data Preprocessing Settings *

// *************************************************************************

private static double lowerDataLimit = - 105000; // lower scale limit

private static double upperDataLimit = 25000000; // upper scale limit

// indicator

// *************************************************************************

// Time Parameters for Tracking Training Time *

// *************************************************************************

private static int startTime;

// *************************************************************************

// Error Message Encoding for Stage II Trainer - Quasi-Newton Trainer *

// *************************************************************************

// Note: For the Epoch Trainer, the error status returned is the status for*

// the Stage II trainer, unless Stage II training is not used. *

// *************************************************************************

private static System.String errorMsg = "";

// Error Status Messages for the Quasi-Newton Trainer

private static System.String errorMsg0 = "--> Network Training";

private static System.String errorMsg1 =

"--> The last global step failed to locate a lower point than the\n" +

"current error value. The current solution may be an approximate\n" +

"solution and no more accuracy is possible, or the step tolerance\n" +

"may be too large.";

private static System.String errorMsg2 =

"--> Relative function convergence; both the actual and \n" +

"predicted relative reductions in the error function are less than\n" +

"or equal to the relative function convergence tolerance.";

private static System.String errorMsg3 =

"--> Scaled step tolerance satisfied; the current solution may be\n" +

"an approximate local solution, or the algorithm is making very slow\n" +

"progress and is not near a solution, or the step tolerance is too big.";

private static System.String errorMsg4 =

"--> Quasi-Newton Trainer threw a \n" +

"MinUnconMultiVar.FalseConvergenceException.";

private static System.String errorMsg5 =

"--> Quasi-Newton Trainer threw a \n" +

"MinUnconMultiVar.MaxIterationsException.";

private static System.String errorMsg6 =

"--> Quasi-Newton Trainer threw a \n" +

"MinUnconMultiVar.UnboundedBelowException.";

// *************************************************************************

// MAIN *

// *************************************************************************

public static void Main(System.String[] args)

{

double[] weight; // Network weights

double[] gradient; // Network gradient after training

double[,] xData; // Training Patterns Input Attributes

double[,] yData; // Training Targets Output Attributes

double[,] contAtt; // A 2D matrix for the continuous training attributes

double[,] outs; // A matrix containing the training output targets

int i, j, m = 0; // Array indices

int nWeights = 0; // Number of network weights

int nCol = 0; // Number of data columns in input file

int[] ignore; // Array of 0's and 1's (0=missing value)

int[] cont_col, outs_col, isMissing = new int[]{0};

//System.String inputLine = "", temp;

//System.String[] dataElement;

// **********************************************************************

// Initialize timers *

// **********************************************************************

NeuralNetworkEx1.startTime =

DateTime.Now.Hour * 60 * 60 * 1000 +

DateTime.Now.Minute * 60 * 1000 +

DateTime.Now.Second * 1000 +

DateTime.Now.Millisecond;

System.Console.Out.WriteLine("--> Starting Data Preprocessing at: " +

startTime.ToString());

// **********************************************************************

// Read continuous attribute data *

// **********************************************************************

// Initialize ignore[] for identifying missing observations

ignore = new int[nObs];

isMissing = new int[1];

openInputFiles();

nCol = readFirstLine(contFileInputStream);

nContinuous = nCol;

System.Console.Out.WriteLine("--> Number of continuous variables: " +

nContinuous);

// If the number of continuous variables is greater than zero then read

// the remainder of this file (contFile)

if (nContinuous > 0)

{

// contFile contains continuous attribute data

contAtt = new double[nObs, nContinuous];

double[] _contAttRow = new double[nContinuous];

// for (int i2 = 0; i2 < nObs; i2++)

// {

// contAtt[i2] = new double[nContinuous];

// }

cont_col = readColumnLabels(contFileInputStream, nContinuous);

for (i = 0; i < nObs; i++)

{

isMissing[0] = - 1;

_contAttRow = readDataLine(contFileInputStream, nContinuous,

isMissing);

for (int jj=0; jj < nContinuous; jj++)

{

contAtt[i,jj] = _contAttRow[jj];

}

ignore[i] = isMissing[0];

if (isMissing[0] >= 0)

m++;

}

}

else

{

nContinuous = 0;

contAtt = new double[1,1];

// for (int i3 = 0; i3 < 1; i3++)

// {

// contAtt[i3] = new double[1];

// }

contAtt[0,0] = 0;

}

closeFile(contFileInputStream);

// **********************************************************************

// Read continuous output targets *

// **********************************************************************

nCol = readFirstLine(outputFileInputStream);

nOutputs = nCol;

System.Console.Out.WriteLine("--> Number of output variables: " +

nOutputs);

outs = new double[nObs, nOutputs];

double[] _outsRow = new double[nOutputs];

// for (int i4 = 0; i4 < nObs; i4++)

// {

// outs[i4] = new double[nOutputs];

// }

// Read numeric labels for continuous output targets

outs_col = readColumnLabels(outputFileInputStream, nOutputs);

m = 0;

for (i = 0; i < nObs; i++)

{

isMissing[0] = ignore[i];

_outsRow = readDataLine(outputFileInputStream, nOutputs, isMissing);

for (int jj =0; jj < nOutputs; jj++)

{

outs[i, jj] = _outsRow[jj];

}

ignore[i] = isMissing[0];

if (isMissing[0] >= 0)

m++;

}

System.Console.Out.WriteLine("--> Number of Missing Observations: "

+ m);

closeFile(outputFileInputStream);

// Remove missing observations using the ignore[] array

m = removeMissingData(nObs, nContinuous, ignore, contAtt);

m = removeMissingData(nObs, nOutputs, ignore, outs);

System.Console.Out.WriteLine("--> Total Number of Training Patterns: "

+ nObs);

nObs = nObs - m;

System.Console.Out.WriteLine("--> Number of Usable Training Patterns: "

+ nObs);

// **********************************************************************

// Setup Method and Bounds for Scale Filter *

// **********************************************************************

ScaleFilter scaleFilter = new ScaleFilter(

ScaleFilter.ScalingMethod.Bounded);

scaleFilter.SetBounds(lowerDataLimit, upperDataLimit, 0, 1);

// **********************************************************************

// PREPROCESS TRAINING PATTERNS *

// **********************************************************************

System.Console.Out.WriteLine(

"--> Starting Preprocessing of Training Patterns");

xData = new double[nObs, nContinuous];

// for (int i5 = 0; i5 < nObs; i5++)

// {

// xData[i5] = new double[nContinuous];

// }

yData = new double[nObs, nOutputs];

// for (int i6 = 0; i6 < nObs; i6++)

// {

// yData[i6] = new double[nOutputs];

// }

for (i = 0; i < nObs; i++)

{

for (j = 0; j < nContinuous; j++)

{

xData[i,j] = contAtt[i,j];

}

yData[i,0] = outs[i,0];

}

// Scale each input column and the output target to [0, 1]

for (j = 0; j < nContinuous; j++)

{

scaleFilter.Encode(j, xData);

}

scaleFilter.Encode(0, yData);

// **********************************************************************

// CREATE FEEDFORWARD NETWORK *

// **********************************************************************

System.Console.Out.WriteLine("--> Creating Feed Forward Network Object");

FeedForwardNetwork network = new FeedForwardNetwork();

// setup input layer with number of inputs = nInputs = 3

network.InputLayer.CreateInputs(nInputs);

// create a hidden layer with nPerceptrons=3 perceptrons

network.CreateHiddenLayer().CreatePerceptrons(nPerceptrons);

// create output layer with nOutputs=1 output perceptron

network.OutputLayer.CreatePerceptrons(nOutputs);

// link all inputs and perceptrons to all perceptrons in the next layer

network.LinkAll();

// Get Network Perceptrons for Setting Their Activation Functions

Perceptron[] perceptrons = network.Perceptrons;

// Set all hidden layer perceptrons to logistic_table activation

for (i = 0; i < perceptrons.Length - 1; i++)

{

perceptrons[i].Activation = hiddenLayerActivation;

}

perceptrons[perceptrons.Length - 1].Activation = outputLayerActivation;

System.Console.Out.WriteLine(

"--> Feed Forward Network Created with 2 Layers");

// **********************************************************************

// TRAIN NETWORK USING EPOCH TRAINER *

// **********************************************************************

System.Console.Out.WriteLine("--> Training Network using Epoch Trainer");

ITrainer trainer = createTrainer(QuasiNewton, QuasiNewton);

startTime =

DateTime.Now.Hour * 60 * 60 * 1000 +

DateTime.Now.Minute * 60 * 1000 +

DateTime.Now.Second * 1000 +

DateTime.Now.Millisecond;

// Train Network

trainer.Train(network, xData, yData);

// Check Training Error Status

switch (trainer.ErrorStatus)

{

case 0: errorMsg = errorMsg0;

break;

case 1: errorMsg = errorMsg1;

break;

case 2: errorMsg = errorMsg2;

break;

case 3: errorMsg = errorMsg3;

break;

case 4: errorMsg = errorMsg4;

break;

case 5: errorMsg = errorMsg5;

break;

case 6: errorMsg = errorMsg6;

break;

default: errorMsg = "--> Unknown Error Status Returned from Trainer";

break;

}

System.Console.Out.WriteLine(errorMsg);

int currentTimeNow =

DateTime.Now.Hour * 60 * 60 * 1000 +

DateTime.Now.Minute * 60 * 1000 +

DateTime.Now.Second * 1000 +

DateTime.Now.Millisecond;

System.Console.Out.WriteLine("--> Network Training Completed at: " +

currentTimeNow.ToString());

double duration = (double) (currentTimeNow - startTime) / 1000.0;

System.Console.Out.WriteLine("--> Training Time: " + duration +

" seconds");

// **********************************************************************

// DISPLAY TRAINING STATISTICS *

// **********************************************************************

double[] stats = network.ComputeStatistics(xData, yData);

// Display Network Errors

System.Console.Out.WriteLine(

"***********************************************");

System.Console.Out.WriteLine("--> SSE: " +

(float)stats[0]);

System.Console.Out.WriteLine("--> RMS: " +

(float)stats[1]);

System.Console.Out.WriteLine("--> Laplacian Error: " +

(float)stats[2]);

System.Console.Out.WriteLine("--> Scaled Laplacian Error: " +

(float)stats[3]);

System.Console.Out.WriteLine("--> Largest Absolute Residual: " +

(float)stats[4]);

System.Console.Out.WriteLine(

"***********************************************");

System.Console.Out.WriteLine("");

// **********************************************************************

// OBTAIN AND DISPLAY NETWORK WEIGHTS AND GRADIENTS *

// **********************************************************************

System.Console.Out.WriteLine("--> Getting Network Weights and Gradients");

// Get weights

weight = network.Weights;

// Get number of weights = number of gradients

nWeights = network.NumberOfWeights;

// Obtain Gradient Vector

gradient = trainer.ErrorGradient;

// Print Network Weights and Gradients

System.Console.Out.WriteLine(" ");

System.Console.Out.WriteLine("--> Network Weights and Gradients:");

System.Console.Out.WriteLine(

"***********************************************");

double[,] printMatrix = new double[nWeights,2];

// for (int i7 = 0; i7 < nWeights; i7++)

// {

// printMatrix[i7] = new double[2];

// }

for (i = 0; i < nWeights; i++)

{

printMatrix[i,0] = weight[i];

printMatrix[i,1] = gradient[i];

}

// Print result with column labels but without row labels.

System.String[] colLabels = new System.String[]{"Weight", "Gradient"};

PrintMatrix pm = new PrintMatrix();

PrintMatrixFormat mf;

mf = new PrintMatrixFormat();

mf.SetNoRowLabels();

mf.SetColumnLabels(colLabels);

pm.SetTitle("Weights and Gradients");

pm.Print(mf, printMatrix);

System.Console.Out.WriteLine(

"***********************************************");

// **********************************************************************

// SAVE THE TRAINED NETWORK BY SAVING THE SERIALIZED NETWORK OBJECT *

// **********************************************************************

System.Console.Out.WriteLine("\n--> Saving Trained Network into " +

networkFileName);

write(network, networkFileName);

System.Console.Out.WriteLine("--> Saving Network Trainer into " +

trainerFileName);

write(trainer, trainerFileName);

System.Console.Out.WriteLine("--> Saving xData into " + xDataFileName);

write(xData, xDataFileName);

System.Console.Out.WriteLine("--> Saving yData into " + yDataFileName);

write(yData, yDataFileName);

}

// *************************************************************************

// OPEN DATA FILES *

// *************************************************************************

static public void openInputFiles()

{

try

{

// Continuous Input Attributes. A plain StreamReader (UTF-8 by default)

// is used; UTF-7 would misread '+' characters, e.g. in "1.5E+06".

contFileInputStream = new System.IO.StreamReader(contFileName);

// Continuous Output Targets

outputFileInputStream = new System.IO.StreamReader(outputFileName);

}

catch (System.Exception e)

{

System.Console.Out.WriteLine("-->ERROR: " + e);

System.Environment.Exit(1);

}

}

// *************************************************************************

// READ FIRST LINE OF DATA FILE AND RETURN NUMBER OF COLUMNS IN FILE *

// *************************************************************************

static public int readFirstLine(System.IO.StreamReader inputFile)

{

System.String inputLine = "", temp;

int nCol = 0;

try

{

temp = inputFile.ReadLine();

inputLine = temp.Trim();

nCol = System.Int32.Parse(inputLine);

}

catch (System.Exception e)

{

System.Console.Out.WriteLine("--> ERROR READING 1st LINE OF FILE: " + e);

System.Environment.Exit(1);

}

return nCol;

}

// *************************************************************************

// READ COLUMN LABELS (2ND LINE IN FILE) *

// *************************************************************************

static public int[] readColumnLabels(System.IO.StreamReader inputFile,

int nCol)

{

int[] contCol = new int[nCol];

System.String inputLine = "", temp;

System.String[] dataElement;

// Read numeric labels for continuous input attributes

try

{

temp = inputFile.ReadLine();

inputLine = temp.Trim();

}

catch (System.Exception e)

{

System.Console.Out.WriteLine("--> ERROR READING 2nd LINE OF FILE: "

+ e);

System.Environment.Exit(1);

}

// Labels may be separated by tabs or spaces

dataElement = inputLine.Split(new char[] {' ', '\t'},

System.StringSplitOptions.RemoveEmptyEntries);

for (int i = 0; i < nCol; i++)

{

contCol[i] = System.Int32.Parse(dataElement[i]);

}

return contCol;

}

// *************************************************************************

// READ DATA ROW *

// *************************************************************************

static public double[] readDataLine(System.IO.StreamReader inputFile,

int nCol, int[] isMissing)

{

double missingValueIndicator = - 9999999999.0;

double[] dataLine = new double[nCol];


System.String inputLine = "", temp;

System.String[] dataElement;

try

{

temp = inputFile.ReadLine();

inputLine = temp.Trim();

}

catch (System.Exception e)

{

System.Console.Out.WriteLine("-->ERROR READING LINE: " + e);

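System.Environment.Exit(1);

}

// Values may be separated by tabs or spaces

dataElement = inputLine.Split(new char[] {' ', '\t'},

System.StringSplitOptions.RemoveEmptyEntries);

for (int j = 0; j < nCol; j++)

{

// Parse with the invariant culture so "0.5" is read correctly

// regardless of the machine's decimal separator

dataLine[j] = System.Double.Parse(dataElement[j],

System.Globalization.CultureInfo.InvariantCulture);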

if (dataLine[j] == missingValueIndicator)

isMissing[0] = 1;

}

return dataLine;

}

// *************************************************************************

// CLOSE FILE *

// *************************************************************************

static public void closeFile(System.IO.StreamReader inputFile)

{

try

{

inputFile.Close();

}

catch (System.Exception e)

{

System.Console.Out.WriteLine("ERROR: Unable to close file: " + e);

System.Environment.Exit(1);

}

}

// *************************************************************************

// REMOVE MISSING DATA *

// *************************************************************************

// Now remove all missing data using the ignore[] array

// and recalculate the number of usable observations, nObs

// This method is inefficient, but it works. It removes one case at a

// time, starting from the bottom. As a case (row) is removed, the cases

// below are pushed up to take its place.

// *************************************************************************

static public int removeMissingData(int nObs, int nCol, int[] ignore,

double[,] inputArray)

{

int m = 0;

for (int i = nObs - 1; i >= 0; i--)

{

if (ignore[i] >= 0)

{

// the ith row contains a missing value

// remove the ith row by shifting all rows below the

// ith row up by one position, e.g. row i+1 -> row i

m++;

if (nCol > 0)

{

for (int j = i; j < nObs - m; j++)

{

for (int k = 0; k < nCol; k++)

{

inputArray[j,k] = inputArray[j + 1,k];

}

}

}

}

}

return m;

}

// *************************************************************************

// Create Stage I/Stage II Trainer *

// *************************************************************************

static public ITrainer createTrainer(System.String s1, System.String s2)

{

EpochTrainer epoch = null; // Epoch Trainer (returned by this method)

QuasiNewtonTrainer stage1Trainer; // Stage I Quasi-Newton Trainer

QuasiNewtonTrainer stage2Trainer; // Stage II Quasi-Newton Trainer

LeastSquaresTrainer stage1LS; // Stage I Least Squares Trainer

LeastSquaresTrainer stage2LS; // Stage II Least Squares Trainer

int currentTimeNow; // Calendar time tracker

// Create Epoch (Stage I/Stage II) trainer from above trainers.

System.Console.Out.WriteLine(" --> Creating Epoch Trainer");

if (s1.Equals(QuasiNewton))

{

// Setup stage I quasi-newton trainer

stage1Trainer = new QuasiNewtonTrainer();

//stage1Trainer.setMaximumStepsize(maxStepSize);

stage1Trainer.MaximumTrainingIterations = stage1Iterations;

stage1Trainer.StepTolerance = stage1StepTolerance;

if (s2.Equals(QuasiNewton))

{

stage2Trainer = new QuasiNewtonTrainer();

//stage2Trainer.setMaximumStepsize(maxStepSize);

stage2Trainer.MaximumTrainingIterations = stage2Iterations;

stage2Trainer.StepTolerance = stage2StepTolerance;

epoch = new EpochTrainer(stage1Trainer, stage2Trainer);

}

else

{

if (s2.Equals(LeastSquares))

{

stage2LS = new LeastSquaresTrainer();

stage2LS.InitialTrustRegion = 1.0e-3;

//stage2LS.setMaximumStepsize(maxStepSize);

stage2LS.MaximumTrainingIterations = stage2Iterations;

epoch = new EpochTrainer(stage1Trainer, stage2LS);

}

else

{

epoch = new EpochTrainer(stage1Trainer);

}

}

}

else

{

// Setup stage I least squares trainer

stage1LS = new LeastSquaresTrainer();

stage1LS.InitialTrustRegion = 1.0e-3;

stage1LS.MaximumTrainingIterations = stage1Iterations;

//stage1LS.setMaximumStepsize(maxStepSize);

if (s2.Equals(QuasiNewton))

{

stage2Trainer = new QuasiNewtonTrainer();

//stage2Trainer.setMaximumStepsize(maxStepSize);

stage2Trainer.MaximumTrainingIterations = stage2Iterations;

stage2Trainer.StepTolerance = stage2StepTolerance;

epoch = new EpochTrainer(stage1LS, stage2Trainer);

}

else

{

if (s2.Equals(LeastSquares))

{

stage2LS = new LeastSquaresTrainer();

stage2LS.InitialTrustRegion = 1.0e-3;

//stage2LS.setMaximumStepsize(maxStepSize);

stage2LS.MaximumTrainingIterations = stage2Iterations;

epoch = new EpochTrainer(stage1LS, stage2LS);

}

else

{

epoch = new EpochTrainer(stage1LS);

}

}

}

epoch.NumberOfEpochs = nEpochs;

epoch.EpochSize = epochSize;

epoch.Random = new Imsl.Stat.Random(1234567);

epoch.SetRandomSamples(new Imsl.Stat.Random(12345),

new Imsl.Stat.Random(67891));

System.Console.Out.WriteLine(" --> Trainer: Stage I - " + s1 +

" Stage II " + s2);

System.Console.Out.WriteLine(" --> Number of Epochs: " + nEpochs);

System.Console.Out.WriteLine(" --> Epoch Size: " + epochSize);

// Describe optimization setup for Stage I training

System.Console.Out.WriteLine(" --> Creating Stage I Trainer");

System.Console.Out.WriteLine(" --> Stage I Iterations: " +

stage1Iterations);

System.Console.Out.WriteLine(" --> Stage I Step Tolerance: " +

stage1StepTolerance);

System.Console.Out.WriteLine(" --> Stage I Relative Tolerance: " +

stage1RelativeTolerance);

System.Console.Out.WriteLine(" --> Stage I Step Size: " +

"DEFAULT");

System.Console.Out.WriteLine(" --> Stage I Trace: " +

trace);

if (s2.Equals(QuasiNewton) || s2.Equals(LeastSquares))

{

// Describe optimization setup for Stage II training

System.Console.Out.WriteLine(" --> Creating Stage II Trainer");

System.Console.Out.WriteLine(" --> Stage II Iterations: " +

stage2Iterations);

System.Console.Out.WriteLine(" --> Stage II Step Tolerance: " +

stage2StepTolerance);

System.Console.Out.WriteLine(" --> Stage II Relative Tolerance: " +

stage2RelativeTolerance);

System.Console.Out.WriteLine(" --> Stage II Step Size: " +

"DEFAULT");

System.Console.Out.WriteLine(" --> Stage II Trace: " +

trace);

}

currentTimeNow =

DateTime.Now.Hour * 60 * 60 * 1000 +

DateTime.Now.Minute * 60 * 1000 +

DateTime.Now.Second * 1000 +

DateTime.Now.Millisecond;

System.Console.Out.WriteLine("--> Starting Network Training at " +

currentTimeNow.ToString());

// Return Stage I/Stage II trainer

return epoch;

}

// *************************************************************************

// WRITE SERIALIZED OBJECT TO A FILE *

// *************************************************************************

static public void write(System.Object obj, System.String filename)

{

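// Note: BinaryFormatter is obsolete in .NET 5 and later (SYSLIB0011) and

// throws PlatformNotSupportedException starting with .NET 9.

using (System.IO.FileStream fos = new System.IO.FileStream(filename,

System.IO.FileMode.Create))

{

IFormatter oos = new BinaryFormatter();

oos.Serialize(fos, obj);

}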

}

static NeuralNetworkEx1()

{

hiddenLayerActivation = Imsl.DataMining.Neural.Activation.LogisticTable;

outputLayerActivation = Imsl.DataMining.Neural.Activation.Linear;

}

}

// *****************************************************************************

--> Starting Data Preprocessing at: 44821683

--> Number of continuous variables: 3

--> Number of output variables: 1

--> Number of Missing Observations: 16507

--> Total Number of Training Patterns: 118519

--> Number of Usable Training Patterns: 102012

--> Starting Preprocessing of Training Patterns

--> Creating Feed Forward Network Object

--> Feed Forward Network Created with 2 Layers

--> Training Network using Epoch Trainer

--> Creating Epoch Trainer

--> Trainer: Stage I - quasi-newton Stage II quasi-newton

--> Number of Epochs: 20

--> Epoch Size: 5000

--> Creating Stage I Trainer

--> Stage I Iterations: 5000

--> Stage I Step Tolerance: 1E-09

--> Stage I Relative Tolerance: 1E-11

--> Stage I Step Size: DEFAULT

--> Stage I Trace: True

--> Creating Stage II Trainer

--> Stage II Iterations: 5000

--> Stage II Step Tolerance: 1E-09

--> Stage II Relative Tolerance: 1E-11

--> Stage II Step Size: DEFAULT

--> Stage II Trace: True

--> Starting Network Training at 45070408

--> The last global step failed to locate a lower point than the

current error value. The current solution may be an approximate

solution and no more accuracy is possible, or the step tolerance

may be too large.

--> Network Training Completed at: 52311842

--> Training Time: 7241.434 seconds

***********************************************

--> SSE: 4.49772

--> RMS: 0.1423779

--> Laplacian Error: 103.4631

--> Scaled Laplacian Error: 0.1707173

--> Largest Absolute Residual: 0.4921748

***********************************************

--> Getting Network Weights and Gradients

--> Network Weights and Gradients:

***********************************************

Weights and Gradients

Weight Gradient

-248.425149158357 -9.50818419128144E-05

-4.01301691047852 -9.08459022567118E-07

248.602873209042 -2.84623837579401E-05

258.622104579914 -8.49451049786515E-05

0.125785905718184 -7.51083204612989E-07

-258.811023180973 -2.81816574426092E-05

-394.380943852438 -0.000125916731945308

-0.356726621727131 -5.25467092773031E-07

394.428311058654 -2.70798222353788E-05

422.855858784789 -1.40339989032276E-06

-1.01024906891467 -8.54119524733673E-07

422.854960914701 3.37315953950526E-08

91.0301743864326 -0.000555459860183764

0.672279284955327 -3.11957565142863E-06

-91.0431760187523 -0.000120208750794691

-422.186774012951 -1.36686903761535E-06

***********************************************

--> Saving Trained Network into NeuralNetworkEx1.ser

--> Saving Network Trainer into NeuralNetworkTrainerEx1.ser

--> Saving xData into NeuralNetworkxDataEx1.ser

--> Saving yData into NeuralNetworkyDataEx1.ser

The above output indicates that the network successfully completed its training. The final sum of squared errors was about 4.50, and the RMS error (a scaled version of the sum of squared errors) was about 0.14. All of the gradients at this solution are nearly zero (the largest in magnitude is about 5.6E-04), which is expected if network training found a local or global optimum. Gradients that are clearly non-zero usually indicate a problem with network training.
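One simple way to automate this check is to compare the largest gradient magnitude against a small threshold. In this Java sketch, `GradientCheck` is a hypothetical helper and the threshold 1e-3 is an arbitrary assumption. A small gradient is a necessary sign of convergence, but it does not prove that the optimum is global.

```java
/** Hypothetical post-training check: are all gradient components close to zero? */
final class GradientCheck {
    static double maxAbs(double[] g) {
        double m = 0.0;
        for (double v : g) m = Math.max(m, Math.abs(v));
        return m;
    }

    static boolean nearStationary(double[] gradient, double tol) {
        return maxAbs(gradient) <= tol;
    }

    public static void main(String[] args) {
        // Gradient column from the first run shown above.
        double[] g = {
            -9.50818419128144E-05, -9.08459022567118E-07, -2.84623837579401E-05,
            -8.49451049786515E-05, -7.51083204612989E-07, -2.81816574426092E-05,
            -0.000125916731945308, -5.25467092773031E-07, -2.70798222353788E-05,
            -1.40339989032276E-06, -8.54119524733673E-07, 3.37315953950526E-08,
            -0.000555459860183764, -3.11957565142863E-06, -0.000120208750794691,
            -1.36686903761535E-06};
        double tol = 1e-3; // assumption: an arbitrary threshold for "nearly zero"
        System.out.println("max |gradient| = " + maxAbs(g)
                + ", near zero: " + nearStationary(g, tol));
    }
}
```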

--> Starting Data Preprocessing at: 52784832

--> Number of continuous variables: 3

--> Number of output variables: 1

--> Number of Missing Observations: 16507

--> Total Number of Training Patterns: 118519

--> Number of Usable Training Patterns: 102012

--> Starting Preprocessing of Training Patterns

--> Creating Feed Forward Network Object

--> Feed Forward Network Created with 2 Layers

--> Training Network using Epoch Trainer

--> Creating Epoch Trainer

--> Trainer: Stage I - quasi-newton Stage II quasi-newton

--> Number of Epochs: 20

--> Epoch Size: 5000

--> Creating Stage I Trainer

--> Stage I Iterations: 5000

--> Stage I Step Tolerance: 1E-09

--> Stage I Relative Tolerance: 1E-11

--> Stage I Step Size: DEFAULT

--> Stage I Trace: True

--> Creating Stage II Trainer

--> Stage II Iterations: 5000

--> Stage II Step Tolerance: 1E-09

--> Stage II Relative Tolerance: 1E-11

--> Stage II Step Size: DEFAULT

--> Stage II Trace: True

--> Starting Network Training at 52801192

--> The last global step failed to locate a lower point than the

current error value. The current solution may be an approximate

solution and no more accuracy is possible, or the step tolerance

may be too large.

--> Network Training Completed at: 54327977

--> Training Time: 1526.785 seconds

***********************************************

--> SSE: 4.39503

--> RMS: 0.1391272

--> Laplacian Error: 115.7665

--> Scaled Laplacian Error: 0.1910183

--> Largest Absolute Residual: 0.510379

***********************************************

--> Getting Network Weights and Gradients

--> Network Weights and Gradients:

***********************************************

Weights and Gradients

Weight Gradient

6.49062717385272 -7.35781305464078E-07

-6.59528808872046 4.80847787053182E-05

4401.51680674985 -1.85429409555815E-08

0.704338219368497 -8.32580200754921E-07

-0.725623033199978 5.70308208281333E-05

2805.39692819902 -1.44534276811825E-08

-3.93198626371718 -2.13822710907307E-06

4.04824502335685 6.44781336574968E-05

-1787.37723908109 -1.7603927485418E-09

38.3945130188417 -4.36277172935969E-05

37.6336974274678 -2.57936093318423E-05

-0.0378671989334698 7.43008547201402E-07

0.4447658217999 -0.000427699567365199

-0.495282381760965 -0.000323163187876674

-1037.62096685008 1.57344076332679E-08

-37.6465155277179 -7.36849253095175E-05

***********************************************

--> Saving Trained Network into NeuralNetworkEx1.ser

--> Saving Network Trainer into NeuralNetworkTrainerEx1.ser

--> Saving xData into NeuralNetworkxDataEx1.ser

--> Saving yData into NeuralNetworkyDataEx1.ser
