Example 1: One-Sided Differences

A simple use of NumericalDerivatives

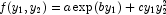

is shown. The gradient of the function  is required for values

is required for values  ,

,  ,

,  ,

,  ,

,  The numerical gradient is compared to the analytic gradient, cast as a 1 by 2 Jacobian:

The numerical gradient is compared to the analytic gradient, cast as a 1 by 2 Jacobian: ![grad(f) = \left[ {\begin{array}{*{20}c} {ab\exp (by_1 ) + cy_2^2 ,} & {2cy_1 y_2 } \\ \end{array} } \right]](eqn/eqn_0335.png) This analytic gradient is expected to approximately agree with the numerical differentiation gradient. Relative agreement should be approximately the square root of machine precision. That is achieved here. Generally this is the most accuracy one can expect using one-sided divided differences.

This analytic gradient is expected to approximately agree with the numerical differentiation gradient. Relative agreement should be approximately the square root of machine precision. That is achieved here. Generally this is the most accuracy one can expect using one-sided divided differences.

using System;

using Imsl.Math;

public class NumericalDerivativesEx1 : NumericalDerivatives.IFunction

{

static int m = 1, n = 2;

static double a, b, c;

// This sets the function value used in forming one-sided

// differences.

public double[] F(int varIndex, double[] y)

{

double[] tmp = new double[m];

tmp[0] = a * Math.Exp(b * y[0]) + c * y[0] * y[1] * y[1];

return tmp;

}

public static void Main(String[] args)

{

double u;

double[] y = new double[n];

double[] scale = new double[n];

double[,] actual = new double[m, n];

double[] re = new double[2];

// Define data and point of evaluation:

a = 2.5e6;

b = 3.4e0;

c = 4.5e0;

y[0] = 2.1e0;

y[1] = 3.2e0;

// Precision for measuring errors

u = Math.Sqrt(2.220446049250313e-016);

// Set scaling:

scale[0] = 1.0e0;

// Increase scale to account for large value of a.

scale[1] = 8.0e3;

// Compute true values of partials.

actual[0, 0] = a * b * Math.Exp(b * y[0]) + c * y[1] * y[1];

actual[0, 1] = 2 * c * y[0] * y[1];

NumericalDerivatives derv =

new NumericalDerivatives(new NumericalDerivativesEx1());

derv.SetScalingFactors(scale);

double[,] jacobian = derv.EvaluateJ(y);

PrintMatrixFormat pmf = new PrintMatrixFormat();

pmf.NumberFormat = "0.00";

new PrintMatrix("Numerical gradient:").Print(pmf, jacobian);

new PrintMatrix("Analytic gradient:").Print(pmf, actual);

// Check the relative accuracy of one-sided differences.

// They should be good to about half-precision.

jacobian[0, 0] = (jacobian[0, 0] - actual[0, 0]) / actual[0, 0];

jacobian[0, 1] = (jacobian[0, 1] - actual[0, 1]) / actual[0, 1];

re[0] = jacobian[0, 0];

re[1] = jacobian[0, 1];

Console.Out.WriteLine("Relative accuracy:");

Console.Out.WriteLine("df/dy_1 df/dy_2");

Console.Out.WriteLine(" {0:F2}u {1:F2}u",

re[0]/u, re[1]/u);

Console.Out.WriteLine("({0:#.###e+00}) ({1:#.###e+00})",

re[0], re[1]);

}

}

Output

Numerical gradient:

0 1

0 10722141696.00 60.48

Analytic gradient:

0 1

0 10722141353.42 60.48

Relative accuracy:

df/dy_1 df/dy_2

2.14u 0.00u

(3.195e-08) (-1.175e-16)

Link to C# source.