NLINLSQ Function (PV-WAVE Advantage)

Solves a nonlinear least-squares problem using a modified Levenberg-Marquardt algorithm.

Usage

result = NLINLSQ(f, m, n)

Input Parameters

f—Scalar string specifying a user-supplied function to evaluate the function that defines the least-squares problem. The f function accepts the following two parameters and returns an array of length m containing the function values at x:

m

m—Number of functions.

x

x—Array length

n containing the point at which the function is evaluated.

m—Number of functions.

n—Number of variables where n ≤ m.

Returned Value

result —The solution x of the nonlinear least-squares problem. If no solution can be computed, NULL is returned.

Input Keywords

Double—If present and nonzero, double precision is used.

XGuess—Array with n components containing an initial guess. Default: XGuess (*) = 0

Jacobian—Scalar string specifying a user-supplied function to compute the Jacobian. This function accepts two parameters and returns an n × m array containing the Jacobian at the point s input point. Note that each derivative ∂fi/∂xj should be returned in the (i, j) element of the returned matrix. The parameters of the function are as follows:

m—

m—Number of equations.

x

x—Array of length

n at which the point Jacobian is evaluated.

XScale—Array with n components containing the scaling vector for the variables. Keyword XScale is used mainly in scaling the gradient and the distance between two points. See keywords Tol_Grad and Tol_Step for more detail. Default: XScale (*) = 1

FScale—Array with m components containing the diagonal scaling matrix for the functions. The ith component of FScale is a positive scalar specifying the reciprocal magnitude of the ith component function of the problem. Default: FScale (*) = 1

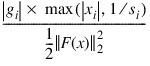

Tol_Grad—Scaled gradient tolerance.

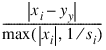

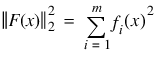

The ith component of the scaled gradient at x is calculated as:

where

,

s = XScale, and

.

Default: Tol_Grad = ε1/2 (ε1/3 in double), where ε is the machine precision

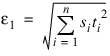

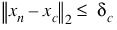

Tol_Step—Scaled step tolerance.

The ith component of the scaled step between two points x and y is computed as:

where s = XScale.

Default: Tol_Step = ε2/3, where ε is the machine precision

Tol_Rfcn—Relative function tolerance. Default: Tol_Rfcn = max(10–10, ε2/3), [max(10–40, ε2/3) in double], where ε is the machine precision

Tol_Afcn—Absolute function tolerance. Default: Tol_Afcn = max(10–20, ε2), [max(10–40, ε2) in double], where ε is the machine precision

Max_Step—Maximum allowable step size. Default: Max_Step = 1000 max(ε1, ε2), where:

s = XScale, and t = XGuess

Trust_Region—Size of initial trust-region radius. Default: based on the initial scaled Cauchy step

N_Digits—Number of good digits in the function. Default: machine dependent

Itmax—Maximum number of iterations. Default: Itmax = 100

Max_Evals—Maximum number of function evaluations. Default: Max_Evals = 400

Max_Jacobian—Maximum number of Jacobian evaluations. Default: Max_Jacobian = 400

Intern_Scale—Internal variable scaling option. With this keyword, the values for XScale are set internally.

Tolerance—Tolerance used in determining linear dependence for the computation of the inverse of JTJ. If Jacobian is specified, Tolerance = 100ε, where ε is the machine precision, is the default; otherwise, SQRT(ε), where ε is the machine precision, is the default.

Output Keywords

Fvec—Name of the variable into which a real array of length m containing the residuals at the approximate solution is stored.

Fjac—Name of the variable into which an array of size m × n containing the Jacobian at the approximate solution is stored.

Rank—Name of the variable into which the rank of the Jacobian is stored.

JTJ_inverse—Name of the variable into which an array of size n × n containing the inverse matrix of JTJ, where J is the final Jacobian, is stored. If JTJ is singular, the inverse is a symmetric g2 inverse of JTJ. (See CHNNDSOL for a discussion of generalized inverses and the definition of the g2 inverse.) See CHNNDSOL for a discussion of generalized inverses and the definition of the g2 inverse.

Input/Output Keywords

Xlb—One dimensional array with n components containing the lower bounds on the variables. Keywords Xlb and Xub must be used together.

Xub—One dimensional array with n components containing the upper bounds on the variables. Keywords Xlb and Xub must be used together.

Discussion

The specific algorithm used in NLINLSQ is dependent on whether the keywords Xlb and Xub are supplied. If keywords Xlb and Xub are not supplied, then the function NLINLSQ is based on the MINPACK routine LMDER by Moré et al. (1980).

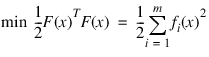

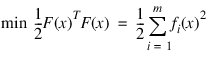

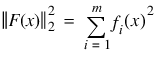

Function NLINLSQ, based on the MINPACK routine LMDER by Moré et al. (1980), uses a modified Levenberg-Marquardt method to solve nonlinear least-squares problems. The problem is stated as follows:

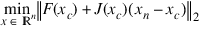

where m ≥ n, F : Rn → Rm and fi (x) is the ith component function of F(x). From a current point, the algorithm uses the trust region approach:

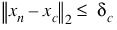

subject to

to get a new point xn. Compute xn as:

xn = xc – (J(xc)T J(xc) + μc I )–1 J(xc)TF(xc)

where μc = 0 if δc ≥ || (J(xc)T J(xc))–1 J(xc)TF(xc) ||2 and μc > 0 otherwise.

The value μc is defined by the function. The vector and matrix F(xc) and J(xc) are the function values and the Jacobian evaluated at the current point xc. This function is repeated until the stopping criteria are satisfied.

The first stopping criterion for NLINLSQ occurs when the norm of the function is less than the absolute function tolerance, Tol_Afcn. The second stopping criterion occurs when the norm of the scaled gradient is less than the given gradient tolerance Tol_Grad. The third stopping criterion for NLINLSQ occurs when the scaled distance between the last two steps is less than the step tolerance Tol_Step. For more details, see Levenberg (1944), Marquardt (1963), or Dennis and Schnabel (1983, Chapter 10).

If keywords Xlb and Xub are supplied, then the function NLINLSQ uses a modified Levenberg-Marquardt method and an active set strategy to solve nonlinear least-squares problems subject to simple bounds on the variables. The problem is stated as follows:

subject to l ≤ x ≤ u where m ≥ n, F : Rn → Rm, and fi(x) is the ith component function of F(x). From a given starting point, an active set IA, which contains the indices of the variables at their bounds, is built. A variable is called a “free variable” if it is not in the active set. The routine then computes the search direction for the free variables according to the formula:

d = – (JTJ + μI)–1 JTF

where μ is the Levenberg-Marquardt parameter, F = F(x), and J is the Jacobian with respect to the free variables. The search direction for the variables in IA is set to zero. The trust region approach discussed by Dennis and Schnabel (1983) is used to find the new point. Finally, the optimality conditions are checked. The conditions are:

||g (xi)|| ≤ ε, li < xi < ui

g (xi) < 0, xi = ui

g (xi) > 0, xi = li

where ε is a gradient tolerance. This process is repeated until the optimality criterion is achieved.

The active set is changed only when a free variable hits its bounds during an iteration or the optimality condition is met for free variables but not for all variables in IA, the active set. In the latter case, a variable that violates the optimality condition will be dropped out of IA. For more detail on the Levenberg-Marquardt method, see Levenberg (1944) or Marquardt (1963). For more detail on the active set strategy, see Gill and Murray (1976).

Since a finite-difference method is used to estimate the Jacobian for some single-precision calculations, an inaccurate estimate of the Jacobian may cause the algorithm to terminate at a noncritical point. In such cases, high-precision arithmetic is recommended. Also, whenever the exact Jacobian can be easily provided, the keyword Jacobian should be used.

Example

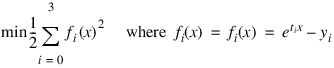

In this example, the nonlinear data-fitting problem found in Dennis and Schnabel (1983, p. 225):

is solved with the data t = [1, 2, 3] and y = [2, 4, 3].

.RUN

; Define the function that defines the least-squares problem.

FUNCTION f, m, x

y = [2, 4, 3]

t = [1, 2, 3]

RETURN, EXP(x(0) * t) - y

END

; Call NLINLSQ.

solution = NLINLSQ('f', 3, 1); Output the results.

PM, solution, Title = 'The solution is:'

; PV-WAVE prints the following:

; The solution is:

; 0.440066

PM, f(m, solution), Title = 'The function values are:'

; PV-WAVE prints the following:

; The function values are:

; -0.447191

; -1.58878

; 0.744159

Informational Errors

MATH_STEP_TOLERANCE—Scaled step tolerance satisfied. The current point may be an approximate local solution, but it is also possible that the algorithm is making very slow progress and is not near a solution or that Tol_Step is too big.

Warning Errors

MATH_LITTLE_FCN_CHANGE—Both the actual and predicted relative reductions in the function are less than or equal to the relative function tolerance.

MATH_TOO_MANY_ITN—Maximum number of iterations exceeded.

MATH_TOO_MANY_FCN_EVAL—Maximum number of function evaluations exceeded.

MATH_TOO_MANY_JACOBIAN_EVAL—Maximum number of Jacobian evaluations exceeded.

MATH_UNBOUNDED—Five consecutive steps have been taken with the maximum step length.

Fatal Errors

MATH_FALSE_CONVERGE—Iterates appear to be converging to a noncritical point.

Version 2017.0

Copyright © 2017, Rogue Wave Software, Inc. All Rights Reserved.

, s = XScale, and

, s = XScale, and  .

.