Given the linear regression model Y = βx + ε, finding the least squares solution  is equivalent to solving the normal equations

is equivalent to solving the normal equations  . Thus the solution for

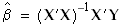

. Thus the solution for  is given by:

is given by:
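As a small worked illustration of the normal equations, the sketch below fits a straight line to four made-up data points using only the Python standard library. The helper names (`transpose`, `matmul`, `solve`, `least_squares_normal_equations`) and the data are assumptions introduced for this example. It is not the algorithm used by any of the calculation classes discussed here, which rely on matrix decompositions rather than forming $X^{T}X$ explicitly.

```python
# Illustrative only: solve the normal equations X^T X b = X^T y for a tiny
# data set using plain Python lists (no external libraries).

def transpose(m):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(row) for row in zip(*m)]

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]

def solve(a, rhs):
    """Solve a @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(a)
    aug = [list(a[i]) + [rhs[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular to working precision")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - s) / aug[i][i]
    return x

def least_squares_normal_equations(X, y):
    """Return beta_hat satisfying (X^T X) beta_hat = X^T y."""
    Xt = transpose(X)
    XtX = matmul(Xt, X)
    Xty = [sum(a * b for a, b in zip(row, y)) for row in Xt]
    return solve(XtX, Xty)

# Hypothetical data lying exactly on the line y = 1 + 2x.
# The first column of X is the intercept term.
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
y = [1.0, 3.0, 5.0, 7.0]
beta_hat = least_squares_normal_equations(X, y)
print(beta_hat)
```

Because the data lie exactly on a line, the recovered coefficients should match the intercept and slope up to floating-point rounding.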

| | |
| --- | --- |
| Pros: | Good performance. Parameter values are recalculated very quickly when adding or removing predictor variables. Model selection performance is best with this calculation method. |
| Cons: | Calculation fails when the regression matrix X has less than full rank. (A matrix has less than full rank if the columns of X are linearly dependent.) Results may not be accurate if X is extremely ill-conditioned. |
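The rank condition mentioned above can be checked by hand on a small case. The following sketch compares a full-rank two-column matrix with one whose second column is twice the first, using the determinant of $X^{T}X$ as a simple indicator: a zero determinant means $X^{T}X$ cannot be inverted. The matrices and the helper names `gram` and `det2` are assumptions made up for this illustration, and a determinant test is not how a production solver detects rank deficiency.

```python
# Illustrative only: linearly dependent columns make X^T X singular.

def gram(X):
    """Return X^T X for a matrix stored as a list of rows."""
    cols = list(zip(*X))
    return [[sum(a * b for a, b in zip(ci, cj)) for cj in cols] for ci in cols]

def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

# Hypothetical regression matrices with two columns each.
full_rank = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]]
deficient = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]  # second column = 2 * first

det_full = det2(gram(full_rank))       # nonzero: X^T X is invertible
det_deficient = det2(gram(deficient))  # zero: X^T X is singular
print(det_full, det_deficient)
```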

| | |
| --- | --- |
| Pros: | Calculation succeeds for regression matrices of less than full rank. However, calculations fail if the regression matrix contains a column of all 0s. |
| Cons: | Slower than the straight QR technique described in “RWLeastSqQRCalc.” |

| | |
| --- | --- |
| Pros: | Works on matrices of less than full rank. Produces accurate results when X has full rank but is highly ill-conditioned. |
| Cons: | Slower than the straight QR technique described in “RWLeastSqQRCalc.” |