SURVIVAL_GLM Function (PV-WAVE Advantage)

Analyzes censored survival data using a generalized linear model and estimates survival probabilities and hazard rates for the various parametric models.

Usage

result = SURVIVAL_GLM(n_class, n_continuous, model, x)

Input Parameters

n_class—Number of classification variables.

n_continuous—Number of continuous variables.

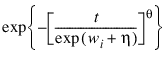

model—Specifies the model used to analyze the data.

0—Exponential

1—Linear hazard

2—Log-normal

3—Normal

4—Log-logistic

5—Logistic

6—Log least extreme value

7—Least extreme value

8—Log extreme value

9—Extreme value

10—Weibull

See the Discussion section for more information about these models.

x—Two-dimensional array of size n_observations by ((n_class + n_continuous) + m) containing data for the independent variables, dependent variable, and optional parameters where n_observations is the number of observations and the optional parameters correspond to keywords Icen, Ilt, Irt, Ifreq, and Ifix.

The columns must be ordered such that the first n_class columns contain data for the class variables, the next n_continuous columns contain data for the continuous variables, and the next column contains the response variable. The final (and optional) m − 1 columns contain optional parameters.

Returned Value

result—An integer value indicating n_coefficients, where n_coefficients is the number of estimated coefficients in the model.

Input Keywords

Double—If present and nonzero, double precision is used.

Icen—The column in

x containing the censoring code for each observation. Possible values are shown in

Icen Values.

Ilt—The column number of x containing the upper endpoint of the failure interval for interval- and left-censored observations.

Irt—The column number of x containing the lower endpoint of the failure interval for interval- and right-censored observations.

Ifreq—The column number of x containing the frequency of response for each observation.

Ifix—Column number in x containing a fixed parameter for each observation that is added to the linear response prior to computing the model parameter. The “fixed” parameter allows one to test hypothesis about the parameters via the log-likelihoods.

Eps—Convergence criterion. Convergence is assumed when maximum relative change in any coefficient estimate is less than Eps from one iteration to the next or when the relative change in the log-likelihood, criterion, from one iteration to the next is less than Eps/100.0. Default: Eps = 0.001

Itmax—Maximum number of iterations. Use Itmax = 0 to compute the Hessian, stored in Covariances, and the Newton step, stored in Last_Step, at the initial estimates (The initial estimates must be input. Use keyword Init_Est). See Example 3. Default: Itmax = 30

No_Intercept—If present and nonzero, there is no intercept in the model. By default, the intercept is automatically included in the model.

Lp_Max—Remove a right- or left-censored observation from the log-likelihood whenever the probability of the observation exceeds 0.995. At convergence, use linear programming to check that all removed observations actually have infinite linear response:

Obs_Status(i) is set to 2 if the linear response is infinite (See keyword Obs_Status). If not all removed observations have infinite linear response, recompute the estimates based upon the observations with finite:

Keyword Lp_Max is the maximum number of observations that can be handled in the linear programming. Setting Lp_Max = n_observations is always sufficient. By default, the function iterates without checking for infinite estimates. Default: No infinity checking; Lp_Max = 0

Var_Effects—One-dimensional array of length n_effects containing the number of variables associated with each effect in the model, where n_effects is the number of effects (sources of variation) in the model. Keywords Var_Effects and Indicies_Effects must be used together.

Indicies_Effects—One-dimensional index array of length Var_Effects(0) + Var_Effects(1) + ... + Var_Effects(n_effects − 1). The first Var_Effects(0) elements give the column numbers of x for each variable in the first effect. The next Var_Effects(1) elements give the column numbers for each variable in the second effect. The last Var_Effects(n_effects − 1) elements give the column numbers for each variable in the last effect. Keywords Indicies_Effects and Var_Effects must be used together.

Init_Est—One-dimensional array containing the initial estimates of the parameters (which requires that the user know the number of coefficients in the model prior to the use of SURVIVAL_GLM). See output keyword Coef_Stat for a description of the “nuisance” parameter, which is the first element of array Init_Est. By default, unweighted linear regression is used to obtain initial estimates.

Max_Class—An upper bound on the sum of the number of distinct values taken on by each classification variable. Internal workspace usage can be significantly reduced with an appropriate choice of Max_Class. Default: Max_Class = n_observations * n_class

Estimation Input Keywords

Est_Nobs—Number of observations for which estimates are to be calculated. Est_Nobs must be positive. Keywords Est_Nobs, Est_Time, Est_Npt, Est_Delta, and Est_Prob must be used together.

Est_Time—Beginning of the time grid for which estimates are desired. Survival probabilities and hazard rates are computed for each covariate vector over the grid of time points Est_Time + i*Est_Delta for i = 0, 1, ..., Est_Npt −1. Keywords Est_Time, Est_Nobs, Est_Npt, Est_Delta, and Est_Prob must be used together.

Est_Npt—Number of points on the time grid for which survival probabilities are desired. Est_Npt must be positive. Keywords Est_Npt, Est_Nobs, Est_Time, Est_Delta, and Est_Prob must be used together.

Est_Delta—Increment between time points on the time grid. Keywords Est_Delta, Est_Nobs, Est_Time, Est_Npt, and Est_Prob must be used together.

Output Keywords

N_Class_Vals—Named variable into which an one-dimensional array of length n_class containing the number of values taken by each classification variable is stored; the ith classification variable has N_Class_Vals(i).

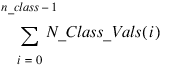

Class_Vals—Named variable into which one-dimensional array of length:

containing the distinct values of the classification variables in ascending order is stored. The first N_Class_Vals(0) elements of Class_Vals contain the values for the first classification variables, the next N_Class_Vals(1) elements contain the values for the second classification variable, etc.

Coef_Stat—Named variable into which a two-dimensional array of size n_coefficients by 4 containing the parameter estimates and associated statistics is stored:

0—Coefficeient estimate.

1—Estimated standard deviation of the estimated coefficient.

2—Asymptotic normal score for testing that the coefficient is zero.

3—The

p-value associated with the normal score in Column 2.

When present in the model, the first coefficient in Coef_Stat is the estimate of the “nuisance” parameter, and the remaining coefficients are estimates of the parameters associated with the “linear” model, beginning with the intercept, if present. Nuisance parameters are as follows:

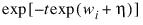

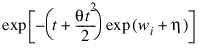

0—No nuisance parameter

1—Coefficient of the quadratic term, term in time,

θ

2–9—Scale parameter,

σ

10—Scale parameter,

θ

Criterion—Named variable into which the optimized criterion is stored. The criterion to be maximized is a constant plus the log-likelihood.

Covariances—Named variable into which a two-dimensional array of size n_coefficients by n_coefficients containing the estimated asymptotic covariance matrix of the coefficients is stored. For Itmax = 0, this is the Hessian computed at the initial parameter estimates.

Means—Named variable into which an one-dimensional array containing the means of the design variables is stored. The array is of length n_coefficients – k if keyword No_Intercept is used, and of length n_coefficients – k – 1 otherwise. Here, k is equal to 0 if model = 0, and equal to 1 otherwise.

Case_Analysis—Named variable into which a two-dimensional array of size n_observations by 5 containing the case analysis below is stored:

0—Estimated predicted value.

1—Estimated influence or leverage.

2—Estimated residual.

3—Estimated cumulative hazard..

4—Non-censored observation: Estimated density at the observation failure time and covariate values. Censored observations: The corresponding estimated probability.

Last_Step—Named variable into which an one-dimensional array of length n_coefficients containing the last parameter updates (excluding step halvings) is stored. Keyword Last_Step is computed as the inverse of the matrix of second partial derivatives times the vector of first partial derivatives of the log-likelihood. When Itmax = 0, the derivatives are computed at the initial estimates.

Obs_Status—Named variable into which an one-dimensional array of length n_observations indcating which observations are included in the extended likelihood is stored.

0—Observation i is in the likelihood

1—Observation

i cannot be in the likelihood because it contains at least one missing value in

x.

2—Observation

i is not in the likelihood. Its estimated parameter is infinite.

Iterations—Named variable into which a two-dimensional array of size,

n by 5 containing information about each iteration of the analysis is stored, where

n is equal to the number of iterations. This is shown in

Column Information.

Nmissing—Named variable into which the number of rows of data that contain missing values in one or more of the following vectors or columns of x is stored: Icen, Ilt, Irt, Ifreq, Ifix, or Indicies_Effects.

Estimation Output Keywords

Est_Prob—Named variable into which a two-dimensional array of size Est_Npt by (2*n_observations + 1) containing the estimated survival probabilities for the covariate groups specified in x is stored. Column 0 contains the survival time. Columns 1 and 2 contain the estimated survival probabilities and hazard rates, respectively, for the covariates in the first row of x. In general, the survival and hazard row i of x is contained in columns 2i – 1 and 2i, respectively, for i = 1, 2, ..., Est_Npt. Keywords Est_Prob, Est_Nobs, Est_Time, Est_Npt, and Est_Delta must be used together.

Est_Xbeta—Named variable into which an one-dimensional array of length n_observations containing the estimated linear response:

for each row of x is stored. To use keyword Est_Xbeta, you must also use keywords Est_Nobs, Est_Time, Est_Npt, Est_Delta, and Est_Prob.

Comments

1. Dummy variables are generated for the classification variables as follows: An ascending list of all distinct values of each classification variable is obtained and stored in

Class_Vals. Dummy variables are then generated for each but the last of these distinct values. Each dummy variable is zero unless the classification variable equals the list value corresponding to the dummy variable, in which case the dummy variable is one. See keyword

Dummy_Method in the

REGRESSORS Function.

2. The “product” of a classification variable with a covariate yields dummy variables equal to the product of the covariate with each of the dummy variables associated with the classification variable.

3. The “product” of two classification variables yields dummy variables in the usual manner. Each dummy variable associated with the first classification variable multiplies each dummy variable associated with the second classification variable. The resulting dummy variables are such that the index of the second classification variable varies fastest.

Discussion

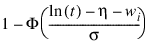

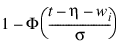

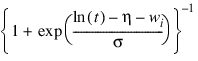

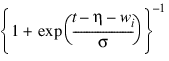

Function SURVIVAL_GLM computes the maximum likelihood estimates of parameters and associated statistics in generalized linear models commonly found in survival (reliability) analysis. Although the terminology used will be from the survival area, the methods discussed have applications in many areas of data analysis, including reliability analysis and event history analysis. These methods can be used anywhere a random variable from one of the discussed distributions is parameterized via one of the models available in SURVIVAL_GLM. Thus, while it is not advisable to do so, standard multiple linear regression can be performed by routine SURVIVAL_GLM. Estimates for any of 10 standard models can be computed. Exact, left-censored, right-censored, or interval-censored observations are allowed (note that left censoring is the same as interval censoring with the left endpoint equal to the left endpoint of the support of the distribution).

Let

η = x

Tβ be the linear parameterization

, where

x is a design vector obtained by SURVIVAL_GLM via function REGRESSORS from a row of

x, and

β is a vector of parameters associated with the linear model. Let T denote the random response variable and S(t) denote the probability that T > t. All models considered also allow a fixed parameter w

i for observation

i (input in column

Ifix of

x). Use of this parameter is discussed below. There also may be nuisance parameters

θ > 0, or

σ > 0 to be estimated (along with

β) in the various models. Let

Φ denote the cumulative normal distribution. The survival models available in SURVIVAL_GLM are listed in

Available Survival Models.

Note that the log-least-extreme-value model is a reparameterization of the Weibull model. Moreover, models 0, 1, 2, 4, 6, 8, and 10 require that T > 0, while all of the remaining models allow any value for T, –∞ < T < ∞.

Each row vector in the data matrix can represent a single observation; or, through the use of vector frequencies, each row can represent several observations. Also note that classification variables and their products are easily incorporated into the models via the usual regression-type specifications.

The constant parameter Wi is input in x and may be used for a number of purposes. For example, if the parameter in an exponential model is known to depend upon the size of the area tested, volume of a radioactive mass, or population density, etc., then a multiplicative factor of the exponential parameter λ= exp (xβ) may be known a priori. This factor can be input in Wi (Wi is the log of the factor).

An alternate use of Wi is as follows: It may be that λ = exp (x1β1 + x2β2), where β2 is known. Letting Wi = x2β2, estimates for β1 can be obtained via SURVIVAL_GLM with the known fixed values for β2. Standard methods can then be used to test hypothesis about β1 via computed log-likelihoods.

Computational Details

The computations proceed as follows:

1. The input parameters are checked for consistency and validity.

Estimates of the means of the “independent” or design variables are computed. Means are computed as:

2. If initial estimates are not provided by the user (see keyword Init_Est), the initial estimates are calculated as follows:

Models 2-10:

a. Kaplan-Meier estimates of the survival probability:

at the upper limit of each failure interval are obtained. (Because upper limits are used, interval- and left-censored data are assumed to be exact failures at the upper endpoint of the failure interval.) The Kaplan-Meier estimate is computed under the assumption that all failure distributions are identical (i.e., all β’s but the intercept, if present, are assumed to be zero).

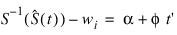

b. If there is an intercept in the model, a simple linear regression is perform predicting:

where t' is computed at the upper endpoint of each failure interval, t' = t in models 3, 5, 7, and 9, and t' = ln (t) in models 2, 4, 6, 8, and 10, and wi is the fixed constant, if present.

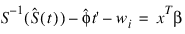

If no intercept is in the model, then α is fixed at zero, and the model:

is fit instead. In this model, the coefficients β are used in place of the location estimate α above. Here:

c. is estimated from the simple linear regression with α = 0.If the intercept is in the model, then in log-location-scale models (models 1-8):

and the initial estimate of the intercept is assumed to be:

In the Weibull model:

and the intercept is assumed to be:

Initial estimates of all parameters β, other than the intercept, are assumed to be zero.

If there is no intercept in the model, the scale parameter is estimated as above, and the estimates:

from Step 2 are used as initial estimates for the β’s.

Models 0 and 1:

For the exponential models (model = 0 or 1), the “average total time on” test statistic is used to obtain an estimate for the intercept. Specifically, let Tt denote the total number of failures divided by the total time on test. The initial estimates for the intercept is then ln(Tt). Initial estimates for the remaining parameters β are assumed to be zero, and if model = 1, the initial estimate for the linear hazard parameter θ is assumed to be a small positive number. When the intercept is not in the model, the initial estimate for the parameter θ is assumed to be a small positive number, and initial estimates of the parameters β are computed via multiple linear regression as in Part A.

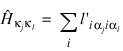

3. A quasi-Newton algorithm is used in the initial iterations based on a Hessian estimate:

where l'iα j is the partial derivative of the ith term in the log-likelihood with respect to the parameter αj, and aj denotes one of the parameters to be estimated.

When the relative change in the log-likelihood from one iteration to the next is 0.1 or less, exact second partial derivatives are used for the Hessian so the Newton-Rapheson iteration is used.

If the initial step size results in an increase in the log-likelihood, the full step is used. If the log-likelihood decreases for the initial step size, the step size is halved, and a check for an increase in the log-likelihood performed. Step-halving is performed (as a simple line search) until an increase in the log-likelihood is detected, or until the step size becomes very small (the initial step size is 1.0).

4. Convergence is assumed when the maximum relative change in any coefficient update from one iteration to the next is less than Eps or when the relative change in the log-likelihood from one iteration to the next is less than Eps/100. Convergence is also assumed after Itmax iterations or when step halving leads to a very small step size with no increase in the log-likelihood.

5. If requested (see keyword Lp_Max), the methods of Clarkson and Jennrich (1988) are used to check for the existence of infinite estimates in:

As an example of a situation in which infinite estimates can occur, suppose that observation j is right-censored with tj > 15 in a normal distribution model in which the mean is:

where xj is the observation design vector. If the design vector xj for parameter βm is such that xjm = 1 and xim = 0 for all i ≠ j, then the optimal estimate of βm occurs at:

leading to an infinite estimate of both βm and ηj. In SURVIVAL_GLM, such estimates can be “computed.”

In all models fit by SURVIVAL_GLM, infinite estimates can only occur when the optimal estimated probability associated with the left- or right-censored observation is 1. If infinity checking is on, left- or right-censored observations that have estimated probability greater than 0.995 at some point during the iterations are excluded from the log-likelihood, and the iterations proceed with a log-likelihood based on the remaining observations. This allows convergence of the algorithm when the maximum relative change in the estimated coefficients is small and also allows for a more precise determination of observations with infinite:

At convergence, linear programming is used to ensure that the eliminated observations have infinite ηi. If some (or all) of the removed observations should not have been removed (because their estimated ηi’s must be finite), then the iterations are restarted with a log-likelihood based upon the finite ηi observations. See Clarkson and Jennrich (1988) for more details.

By default, or when not using keyword Lp_Max (see keyword Lp_Max), no observations are eliminated during the iterations. In this case, the infinite estimates occur, some (or all) of the coefficient estimates:

will become large, and it is likely that the Hessian will become (numerically) singular prior to convergence.

6. The case statistics are computed as follows: Let

Ii (θi ) denote the log-likelihood of the

ith observation evaluated at

θi, let

I'i denote the vector of derivatives of

Ii with respect to all parameters,

denote the derivative of

Ii with respect to

η = xTβ, H denote the Hessian, and

E denote expectation. Then the columns of

Case_Analysis are:

a. Predicted values are computed as E (T/x) according to standard formulas. If model is 4 or 8, and if s ≥ 1, then the expected values cannot be computed because they are infinite.

b. Following Cook and Weisberg (1982), the influence (or leverage) of the ith observation is assumed to be:

This quantity is a one-step approximation of the change in the estimates when the ith observation is deleted (ignoring the nuisance parameters).

c. The “residual” is computed as

.

. d. The cumulative hazard is computed at the observation covariate values and, for interval observations, the upper endpoint of the failure interval. The cumulative hazard also can be used as a “residual” estimate. If the model is correct, the cumulative hazards should follow a standard exponential distribution. See Cox and Oakes (1984).

Function SURVIVAL_GLM computes estimates of survival probabilities and hazard rates for the parametric survival/reliability models when using the Est_* keywords.

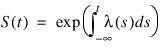

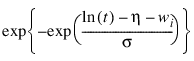

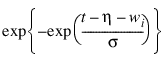

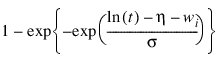

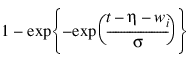

Let η = xTβ be the linear parameterization, where x is the design vector corresponding to a row of x (SURVIVAL_GLM generates the design vector using function REGRESSORS), and β is a vector of parameters associated with the linear model. Let T denote the random response variable and S(t) denote the probability that T > t. All models considered also allow a fixed parameter w (input in column Ifix of x). Use of the keyword is discussed in above. There also may be nuisance parameters θ > 0 or σ > 0. Let λ(t) denote the hazard rate at time t. Then λ(t) and S(t) are related at:

Models 0, 1, 2, 4, 6, 8, and 10 require that T > 0 (in which case assume λ(s) = 0 for s < 0), while the remaining models allow arbitrary values for T, −∞ < T < ∞. The computations proceed in function SURVIVAL_GLM when using the keywords Est_* as follows:

1. The input arguments are checked for consistency and validity.

2. For each row of x, the explanatory variables are generated from the classification and variables and the covariates using function REGRESSORS with keyword Dummy_Method.

3. For each point requested in the time grid, the survival probabilities and hazard rates are computed.

Programming Notes

Indicator (dummy) variables are created for the classification variables using function REGRESSORS (Chapter 3, Regression) using keyword Dummy_Method.

Example 1

This example is taken from Lawless (1982, p. 287) and involves the mortality of patients suffering from lung cancer. An exponential distribution is fit for the model:

η = μ + α i + γ k + β 6 x3 + β 7x4 + β 8x5

where αi is associated with a classification variable with four levels, and γk is associated with a classification variable with two levels. Note that because the computations are performed in single precision, there will be some small variation in the estimated coefficients across different machine environments.

note | To run this example, use the .RUN command and then copy and paste this example into PV‑WAVE. |

PRO print_results, cs

PRINT, ' Coefficient Satistics'

PRINT, ' Coefficient s.e z p'

PM, cs, Format = '(4F14.4)'

END

x = TRANSPOSE([ [1.0, 0.0, 7.0, 64.0, 5.0, 411.0, 0.0] , $

[1.0, 0.0, 6.0, 63.0, 9.0, 126.0, 0.0] , $

[1.0, 0.0, 7.0, 65.0, 11.0, 118.0, 0.0] , $

[1.0, 0.0, 4.0, 69.0, 10.0, 92.0, 0.0] , $

[1.0, 0.0, 4.0, 63.0, 58.0, 8.0, 0.0] , $

[1.0, 0.0, 7.0, 48.0, 9.0, 25.0, 1.0] , $

[1.0, 0.0, 7.0, 48.0, 11.0, 11.0, 0.0] , $

[2.0, 0.0, 8.0, 63.0, 4.0, 54.0, 0.0] , $

[2.0, 0.0, 6.0, 63.0, 14.0, 153.0, 0.0] , $

[2.0, 0.0, 3.0, 53.0, 4.0, 16.0, 0.0] , $

[2.0, 0.0, 8.0, 43.0, 12.0, 56.0, 0.0] , $

[2.0, 0.0, 4.0, 55.0, 2.0, 21.0, 0.0] , $

[2.0, 0.0, 6.0, 66.0, 25.0, 287.0, 0.0] , $

[2.0, 0.0, 4.0, 67.0, 23.0, 10.0, 0.0] , $

[3.0, 0.0, 2.0, 61.0, 19.0, 8.0, 0.0] , $

[3.0, 0.0, 5.0, 63.0, 4.0, 12.0, 0.0] , $

[4.0, 0.0, 5.0, 66.0, 16.0, 177.0, 0.0] , $

[4.0, 0.0, 4.0, 68.0, 12.0, 12.0, 0.0] , $

[4.0, 0.0, 8.0, 41.0, 12.0, 200.0, 0.0] , $

[4.0, 0.0, 7.0, 53.0, 8.0, 250.0, 0.0] , $

[4.0, 0.0, 6.0, 37.0, 13.0, 100.0, 0.0] , $

[1.0, 1.0, 9.0, 54.0, 12.0, 999.0, 0.0] , $

[1.0, 1.0, 5.0, 52.0, 8.0, 231.0, 1.0] , $

[1.0, 1.0, 7.0, 50.0, 7.0, 991.0, 0.0] , $

[1.0, 1.0, 2.0, 65.0, 21.0, 1.0, 0.0] , $

[1.0, 1.0, 8.0, 52.0, 28.0, 201.0, 0.0] , $

[1.0, 1.0, 6.0, 70.0, 13.0, 44.0, 0.0] , $

[1.0, 1.0, 5.0, 40.0, 13.0, 15.0, 0.0] , $

[2.0, 1.0, 7.0, 36.0, 22.0, 103.0, 1.0] , $

[2.0, 1.0, 4.0, 44.0, 36.0, 2.0, 0.0] , $

[2.0, 1.0, 3.0, 54.0, 9.0, 20.0, 0.0] , $

[2.0, 1.0, 3.0, 59.0, 87.0, 51.0, 0.0] , $

[3.0, 1.0, 4.0, 69.0, 5.0, 18.0, 0.0] , $

[3.0, 1.0, 6.0, 50.0, 22.0, 90.0, 0.0] , $

[3.0, 1.0, 8.0, 62.0, 4.0, 84.0, 0.0] , $

[4.0, 1.0, 7.0, 68.0, 15.0, 164.0, 0.0] , $

[4.0, 1.0, 3.0, 39.0, 4.0, 19.0, 0.0] , $

[4.0, 1.0, 6.0, 49.0, 11.0, 43.0, 0.0] , $

[4.0, 1.0, 8.0, 64.0, 10.0, 340.0, 0.0] , $

[4.0, 1.0, 7.0, 67.0, 18.0, 231.0, 0.0]])

n_class = 2

n_continuous = 3

model = 0

icen = 6

irt = 5

lp_max = 40

n_coef = SURVIVAL_GLM(n_class, n_continuous, model, x, $

Icen = icen, Irt = irt, Lp_Max = lp_max, Coef_Stat = cs)

print_results, cs

This results in the following output:

Coefficient Satistics

Coefficient s.e z p

-1.1027 1.3091 -0.8423 0.3998

-0.3626 0.4446 -0.8156 0.4149

0.1271 0.4863 0.2613 0.7939

0.8690 0.5861 1.4825 0.1385

0.2697 0.3882 0.6948 0.4873

-0.5400 0.1081 -4.9946 0.0000

-0.0090 0.0197 -0.4594 0.6460

-0.0034 0.0117 -0.2912 0.7710

Example 2

This example is the same as Example 1, but more keywords are demonstrated.

note | To run this example, use the .RUN command and then copy and paste this example into PV‑WAVE. |

PRO print_results, cs, iter, crit, nmiss

PRINT, ' Coefficient Satistics'

PRINT, ' Coefficient s.e z p'

PM, cs, Format = '(4F14.4)'

PRINT

PRINT, ' Iteration Information'

PRINT, 'Method Iteration Step Size Coef Update ', $

'Log-Likelihood'

PM, iter, Format = '(I3, I10, 2F14.4, F14.1)'

PRINT

PRINT, 'Log-Likelihood =', crit

PRINT

PRINT, 'Number of Missing Value = ', nmiss, $

Format = '(A26, I3)'

END

x = TRANSPOSE([ [1.0, 0.0, 7.0, 64.0, 5.0, 411.0, 0.0] , $

[1.0, 0.0, 6.0, 63.0, 9.0, 126.0, 0.0] , $

[1.0, 0.0, 7.0, 65.0, 11.0, 118.0, 0.0] , $

[1.0, 0.0, 4.0, 69.0, 10.0, 92.0, 0.0] , $

[1.0, 0.0, 4.0, 63.0, 58.0, 8.0, 0.0] , $

[1.0, 0.0, 7.0, 48.0, 9.0, 25.0, 1.0] , $

[1.0, 0.0, 7.0, 48.0, 11.0, 11.0, 0.0] , $

[2.0, 0.0, 8.0, 63.0, 4.0, 54.0, 0.0] , $

[2.0, 0.0, 6.0, 63.0, 14.0, 153.0, 0.0] , $

[2.0, 0.0, 3.0, 53.0, 4.0, 16.0, 0.0] , $

[2.0, 0.0, 8.0, 43.0, 12.0, 56.0, 0.0] , $

[2.0, 0.0, 4.0, 55.0, 2.0, 21.0, 0.0] , $

[2.0, 0.0, 6.0, 66.0, 25.0, 287.0, 0.0] , $

[2.0, 0.0, 4.0, 67.0, 23.0, 10.0, 0.0] , $

[3.0, 0.0, 2.0, 61.0, 19.0, 8.0, 0.0] , $

[3.0, 0.0, 5.0, 63.0, 4.0, 12.0, 0.0] , $

[4.0, 0.0, 5.0, 66.0, 16.0, 177.0, 0.0] , $

[4.0, 0.0, 4.0, 68.0, 12.0, 12.0, 0.0] , $

[4.0, 0.0, 8.0, 41.0, 12.0, 200.0, 0.0] , $

[4.0, 0.0, 7.0, 53.0, 8.0, 250.0, 0.0] , $

[4.0, 0.0, 6.0, 37.0, 13.0, 100.0, 0.0] , $

[1.0, 1.0, 9.0, 54.0, 12.0, 999.0, 0.0] , $

[1.0, 1.0, 5.0, 52.0, 8.0, 231.0, 1.0] , $

[1.0, 1.0, 7.0, 50.0, 7.0, 991.0, 0.0] , $

[1.0, 1.0, 2.0, 65.0, 21.0, 1.0, 0.0] , $

[1.0, 1.0, 8.0, 52.0, 28.0, 201.0, 0.0] , $

[1.0, 1.0, 6.0, 70.0, 13.0, 44.0, 0.0] , $

[1.0, 1.0, 5.0, 40.0, 13.0, 15.0, 0.0] , $

[2.0, 1.0, 7.0, 36.0, 22.0, 103.0, 1.0] , $

[2.0, 1.0, 4.0, 44.0, 36.0, 2.0, 0.0] , $

[2.0, 1.0, 3.0, 54.0, 9.0, 20.0, 0.0] , $

[2.0, 1.0, 3.0, 59.0, 87.0, 51.0, 0.0] , $

[3.0, 1.0, 4.0, 69.0, 5.0, 18.0, 0.0] , $

[3.0, 1.0, 6.0, 50.0, 22.0, 90.0, 0.0] , $

[3.0, 1.0, 8.0, 62.0, 4.0, 84.0, 0.0] , $

[4.0, 1.0, 7.0, 68.0, 15.0, 164.0, 0.0] , $

[4.0, 1.0, 3.0, 39.0, 4.0, 19.0, 0.0] , $

[4.0, 1.0, 6.0, 49.0, 11.0, 43.0, 0.0] , $

[4.0, 1.0, 8.0, 64.0, 10.0, 340.0, 0.0] , $

[4.0, 1.0, 7.0, 67.0, 18.0, 231.0, 0.0]])

n_class = 2

n_continuous = 3

model = 0

icen = 6

irt = 5

lp_max = 40

n_coef = SURVIVAL_GLM(n_class, n_continuous, model, x, $

Icen = icen, Irt = irt, Lp_Max = lp_max, $

N_Class_Vals = ncv, Class_Vals = cv, $

Coef_Stat = cs, Criterion = crit, $

Means = means, Case_Analysis = ca, $

Iterations = iter, Obs_Status = os, Nmissing = nmiss)

print_results, cs, iter, crit, nmiss

This results in the following output:

Coefficient Satistics

Coefficient s.e z p

-1.1027 1.3091 -0.8423 0.3998

-0.3626 0.4446 -0.8156 0.4149

0.1271 0.4863 0.2613 0.7939

0.8690 0.5861 1.4825 0.1385

0.2697 0.3882 0.6948 0.4873

-0.5400 0.1081 -4.9946 0.0000

-0.0090 0.0197 -0.4594 0.6460

-0.0034 0.0117 -0.2912 0.7710

Iteration Information

Method Iteration Step Size Coef Update Log-Likelihood

0 0 NaN NaN -224.0

0 1 1.0000 0.9839 -213.4

1 2 1.0000 3.6034 -207.3

1 3 1.0000 10.1238 -204.3

1 4 1.0000 0.1430 -204.1

1 5 1.0000 0.0117 -204.1

Log-Likelihood = -204.139

Number of Missing Value = 0

Example 3

In this example, the same data and model as example 1 are used, but Itmax is set to zero iterations with model coefficients restricted such that μ = ‑1.25, β6 = −0.6, and the remaining six coefficients are equal to zero. A chi-squared statistic, with 8 degrees of freedom for testing the coefficients is specified as above (versus the alternative that it is not as specified), can be computed, based on the output, as:

where:

is output in Covariances. The resulting test statistic, χ2 = 6.107, based upon no iterations is comparable to likelihood ratio test that can be computed from the log-likelihood output in this example (−206.683) and the log-likelihood output in Example 2 (-204.139).

note | To run this example, use the .RUN command and then copy and paste this example into PV‑WAVE. |

PRO print_results, cs, means, cov, crit, ls

PRINT, ' Coefficient Satistics'

PRINT, ' Coefficient s.e z p'

PM, cs, Format = '(4F14.4)'

PRINT

PRINT, ' Covariate Means'

PRINT, means, Format = '(7F8.2)'

PRINT

PRINT, ' Hessian'

PM, cov, Format = '(8F8.4)'

PRINT

PRINT, 'Log-Likelihood =', crit

PRINT

PRINT, ' Newton_Raphson Step'

PRINT, ls, Format = '(8F8.4)'

END

x = TRANSPOSE([ [1.0, 0.0, 7.0, 64.0, 5.0, 411.0, 0.0] , $

[1.0, 0.0, 6.0, 63.0, 9.0, 126.0, 0.0] , $

[1.0, 0.0, 7.0, 65.0, 11.0, 118.0, 0.0] , $

[1.0, 0.0, 4.0, 69.0, 10.0, 92.0, 0.0] , $

[1.0, 0.0, 4.0, 63.0, 58.0, 8.0, 0.0] , $

[1.0, 0.0, 7.0, 48.0, 9.0, 25.0, 1.0] , $

[1.0, 0.0, 7.0, 48.0, 11.0, 11.0, 0.0] , $

[2.0, 0.0, 8.0, 63.0, 4.0, 54.0, 0.0] , $

[2.0, 0.0, 6.0, 63.0, 14.0, 153.0, 0.0] , $

[2.0, 0.0, 3.0, 53.0, 4.0, 16.0, 0.0] , $

[2.0, 0.0, 8.0, 43.0, 12.0, 56.0, 0.0] , $

[2.0, 0.0, 4.0, 55.0, 2.0, 21.0, 0.0] , $

[2.0, 0.0, 6.0, 66.0, 25.0, 287.0, 0.0] , $

[2.0, 0.0, 4.0, 67.0, 23.0, 10.0, 0.0] , $

[3.0, 0.0, 2.0, 61.0, 19.0, 8.0, 0.0] , $

[3.0, 0.0, 5.0, 63.0, 4.0, 12.0, 0.0] , $

[4.0, 0.0, 5.0, 66.0, 16.0, 177.0, 0.0] , $

[4.0, 0.0, 4.0, 68.0, 12.0, 12.0, 0.0] , $

[4.0, 0.0, 8.0, 41.0, 12.0, 200.0, 0.0] , $

[4.0, 0.0, 7.0, 53.0, 8.0, 250.0, 0.0] , $

[4.0, 0.0, 6.0, 37.0, 13.0, 100.0, 0.0] , $

[1.0, 1.0, 9.0, 54.0, 12.0, 999.0, 0.0] , $

[1.0, 1.0, 5.0, 52.0, 8.0, 231.0, 1.0] , $

[1.0, 1.0, 7.0, 50.0, 7.0, 991.0, 0.0] , $

[1.0, 1.0, 2.0, 65.0, 21.0, 1.0, 0.0] , $

[1.0, 1.0, 8.0, 52.0, 28.0, 201.0, 0.0] , $

[1.0, 1.0, 6.0, 70.0, 13.0, 44.0, 0.0] , $

[1.0, 1.0, 5.0, 40.0, 13.0, 15.0, 0.0] , $

[2.0, 1.0, 7.0, 36.0, 22.0, 103.0, 1.0] , $

[2.0, 1.0, 4.0, 44.0, 36.0, 2.0, 0.0] , $

[2.0, 1.0, 3.0, 54.0, 9.0, 20.0, 0.0] , $

[2.0, 1.0, 3.0, 59.0, 87.0, 51.0, 0.0] , $

[3.0, 1.0, 4.0, 69.0, 5.0, 18.0, 0.0] , $

[3.0, 1.0, 6.0, 50.0, 22.0, 90.0, 0.0] , $

[3.0, 1.0, 8.0, 62.0, 4.0, 84.0, 0.0] , $

[4.0, 1.0, 7.0, 68.0, 15.0, 164.0, 0.0] , $

[4.0, 1.0, 3.0, 39.0, 4.0, 19.0, 0.0] , $

[4.0, 1.0, 6.0, 49.0, 11.0, 43.0, 0.0] , $

[4.0, 1.0, 8.0, 64.0, 10.0, 340.0, 0.0] , $

[4.0, 1.0, 7.0, 67.0, 18.0, 231.0, 0.0]])

n_class = 2

n_continuous = 3

model = 0

icen = 6

irt = 5

lp_max = 40

itmax = 0

init_est = [-1.25, 0.0, 0.0, 0.0, 0.0, -0.6, 0.0, 0.0]

n_coef = SURVIVAL_GLM(n_class, n_continuous, model, x, $

Icen = icen, Irt = irt, Itmax = itmax, $

Lp_Max = lp_max, Init_Est = init_est, $

Coef_Stat = cs, Criterion = crit, $

Covariances = cov, Means = means, Last_Step = ls)

print_results, cs, means, cov, crit, ls

This results in the following output:

Coefficient Satistics

Coefficient s.e z p

-1.2500 1.3773 -0.9076 0.3643

0.0000 0.4288 0.0000 1.0000

0.0000 0.5299 0.0000 1.0000

0.0000 0.7748 0.0000 1.0000

0.0000 0.4051 0.0000 1.0000

-0.6000 0.1118 -5.3652 0.0000

0.0000 0.0215 0.0000 1.0000

0.0000 0.0109 0.0000 1.0000

Covariate Means

0.35 0.28 0.12 0.53 5.65 56.58 15.65

Hessian

1.8969 -0.0906 -0.1641 -0.1681 0.0778 -0.0818 -0.0235 -0.0012

-0.0906 0.1839 0.0996 0.1191 0.0358 -0.0005 -0.0008 0.0006

-0.1641 0.0996 0.2808 0.1264 -0.0226 0.0104 0.0005 -0.0021

-0.1681 0.1191 0.1264 0.6003 0.0460 0.0193 -0.0016 0.0007

0.0778 0.0358 -0.0226 0.0460 0.1641 0.0060 -0.0040 0.0017

-0.0818 -0.0005 0.0104 0.0193 0.0060 0.0125 0.0000 0.0003

-0.0235 -0.0008 0.0005 -0.0016 -0.0040 0.0000 0.0005 -0.0001

-0.0012 0.0006 -0.0021 0.0007 0.0017 0.0003 -0.0001 0.0001

Log-Likelihood = -206.683

Newton_Raphson Step

0.1706 -0.3365 0.1333 1.2967 0.2985 0.0625 -0.0112 -0.0026

Example 4

This example is a continuation of the first example above. Keywords Est_* are used in the function SURVIVAL_GLM to compute the parameter estimates. The example is taken from Lawless (1982, p. 287) and involves the mortality of patients suffering from lung cancer.

note | To run this example, use the .RUN command and then copy and paste this example into PV‑WAVE. |

PRO print_results, ep

PRINT, ' Survival and Hazard Estimates'

PRINT, ' Time S1 H1 S2 H2'

PM, ep, Format = '(F7.2, F10.4, F13.6, F10.4, F13.6)'

END

x = TRANSPOSE([ [1.0, 0.0, 7.0, 64.0, 5.0, 411.0, 0.0] , $

[1.0, 0.0, 6.0, 63.0, 9.0, 126.0, 0.0] , $

[1.0, 0.0, 7.0, 65.0, 11.0, 118.0, 0.0] , $

[1.0, 0.0, 4.0, 69.0, 10.0, 92.0, 0.0] , $

[1.0, 0.0, 4.0, 63.0, 58.0, 8.0, 0.0] , $

[1.0, 0.0, 7.0, 48.0, 9.0, 25.0, 1.0] , $

[1.0, 0.0, 7.0, 48.0, 11.0, 11.0, 0.0] , $

[2.0, 0.0, 8.0, 63.0, 4.0, 54.0, 0.0] , $

[2.0, 0.0, 6.0, 63.0, 14.0, 153.0, 0.0] , $

[2.0, 0.0, 3.0, 53.0, 4.0, 16.0, 0.0] , $

[2.0, 0.0, 8.0, 43.0, 12.0, 56.0, 0.0] , $

[2.0, 0.0, 4.0, 55.0, 2.0, 21.0, 0.0] , $

[2.0, 0.0, 6.0, 66.0, 25.0, 287.0, 0.0] , $

[2.0, 0.0, 4.0, 67.0, 23.0, 10.0, 0.0] , $

[3.0, 0.0, 2.0, 61.0, 19.0, 8.0, 0.0] , $

[3.0, 0.0, 5.0, 63.0, 4.0, 12.0, 0.0] , $

[4.0, 0.0, 5.0, 66.0, 16.0, 177.0, 0.0] , $

[4.0, 0.0, 4.0, 68.0, 12.0, 12.0, 0.0] , $

[4.0, 0.0, 8.0, 41.0, 12.0, 200.0, 0.0] , $

[4.0, 0.0, 7.0, 53.0, 8.0, 250.0, 0.0] , $

[4.0, 0.0, 6.0, 37.0, 13.0, 100.0, 0.0] , $

[1.0, 1.0, 9.0, 54.0, 12.0, 999.0, 0.0] , $

[1.0, 1.0, 5.0, 52.0, 8.0, 231.0, 1.0] , $

[1.0, 1.0, 7.0, 50.0, 7.0, 991.0, 0.0] , $

[1.0, 1.0, 2.0, 65.0, 21.0, 1.0, 0.0] , $

[1.0, 1.0, 8.0, 52.0, 28.0, 201.0, 0.0] , $

[1.0, 1.0, 6.0, 70.0, 13.0, 44.0, 0.0] , $

[1.0, 1.0, 5.0, 40.0, 13.0, 15.0, 0.0] , $

[2.0, 1.0, 7.0, 36.0, 22.0, 103.0, 1.0] , $

[2.0, 1.0, 4.0, 44.0, 36.0, 2.0, 0.0] , $

[2.0, 1.0, 3.0, 54.0, 9.0, 20.0, 0.0] , $

[2.0, 1.0, 3.0, 59.0, 87.0, 51.0, 0.0] , $

[3.0, 1.0, 4.0, 69.0, 5.0, 18.0, 0.0] , $

[3.0, 1.0, 6.0, 50.0, 22.0, 90.0, 0.0] , $

[3.0, 1.0, 8.0, 62.0, 4.0, 84.0, 0.0] , $

[4.0, 1.0, 7.0, 68.0, 15.0, 164.0, 0.0] , $

[4.0, 1.0, 3.0, 39.0, 4.0, 19.0, 0.0] , $

[4.0, 1.0, 6.0, 49.0, 11.0, 43.0, 0.0] , $

[4.0, 1.0, 8.0, 64.0, 10.0, 340.0, 0.0] , $

[4.0, 1.0, 7.0, 67.0, 18.0, 231.0, 0.]])

n_class = 2

n_continuous = 3

model = 0

icen = 6

irt = 5

lp_max = 40

time = 10.0

npt = 10

delta = 20.0

n_coef = SURVIVAL_GLM(n_class, n_continuous, model, x, $

Icen=icen, Irt=irt, Lp_Max=lp_max, N_Class_Vals=nvc, $

Class_Vals=cv, Coef_Stat=cs, Criterion=crit, Means=means, $

Case_Analysis=ca, Obs_Status=os, Iterations=iter, $

Est_Nobs=2, Est_Time=time, Est_Npt=npt, $

Est_Delta=delta, Est_Prob=ep, Est_Xbeta=xb)

print_results, ep

This results in the following output:

Survival and Hazard Estimates

Time S1 H1 S2 H2

10.00 0.9626 0.003807 0.9370 0.006503

30.00 0.8921 0.003807 0.8228 0.006503

50.00 0.8267 0.003807 0.7224 0.006503

70.00 0.7661 0.003807 0.6343 0.006503

90.00 0.7099 0.003807 0.5570 0.006503

110.00 0.6579 0.003807 0.4890 0.006503

130.00 0.6096 0.003807 0.4294 0.006503

150.00 0.5649 0.003807 0.3770 0.006503

170.00 0.5235 0.003807 0.3310 0.006503

190.00 0.4852 0.003807 0.2907 0.006503

Warning Errors

STAT_CONVERGENCE_ASSUMED_1—Too many step halvings. Convergence is assumed.

STAT_CONVERGENCE_ASSUMED_2—Too many step iterations. Convergence is assumed.

STAT_NO_PREDICTED_1—“estimates(0)” > 1.0. The expected value for the log logistic distribution (“model” = 4) does not exist. Predicted values will not be calculated.

STAT_NO_PREDICTED_2—“estimates(0)” > 1.0. The expected value for the log extreme value distribution(“model” = 8) does not exist. Predicted values will not be calculated.

STAT_NEG_EIGENVALUE—The Hessian has at least one negative eigenvalue. An upper bound on the absolute value of the minimum eigenvalue is # corresponding to variable index #.

STAT_INVALID_FAILURE_TIME_4—“x(#)(“Ilt”= #)” = # and “x(#)

(“Irt”= #)” = #. The censoring interval has length 0.0. The censoring code for this observation is being set to 0.0.

Fatal Error

STAT_MAX_CLASS_TOO_SMALL—The number of distinct values of the classification variables exceeds “Max_Class” = #.

STAT_TOO_FEW_COEF—Init_Est is specified, and “Init_Est” = #. The model specified requires # coefficients.

STAT_TOO_FEW_VALID_OBS—“n_observations” = # and “Nmissing” = #. “n_observations”(”Nmissing” must be greater than or equal to 2 in order to estimate the coefficients.

STAT_SVGLM_1—For the exponential model (“model” = 0) with “n_effects” = # and no intercept, “n_coef” has been determined to equal 0. With no coefficients in the model, processing cannot continue.

STAT_INCREASE_LP_MAX—Too many observations are to be deleted from the model. Either use a different model or increase the workspace.

STAT_INVALID_DATA_8—“Class_Vals(#)” = #. The number of distinct values for each classification variable must be greater than one.

Version 2017.0

Copyright © 2017, Rogue Wave Software, Inc. All Rights Reserved.

denote the derivative of Ii with respect to η = xTβ, H denote the Hessian, and E denote expectation. Then the columns of Case_Analysis are:

denote the derivative of Ii with respect to η = xTβ, H denote the Hessian, and E denote expectation. Then the columns of Case_Analysis are:

.

.